Over this one-month period, I will study the application of machine learning and deep learning to fuel cell development.

Fundamentals, materials, and machine learning of polymer electrolyte membrane fuel cell technology

Yun Wang et al., Energy and AI 1 (2020) 100014

Machine learning and artificial intelligence (AI) have received increasing attention in material/energy development. This review also discusses their applications and potential in the development of fundamental knowledge and correlations, material selection and improvement, cell design and optimization, system control, power management, and monitoring of operation health for PEM fuel cells, along with main physics in PEM fuel cells for physics-informed machine learning.

4. Machine learning in PEMFC development

4.1. Machine learning overview

According to learning style, machine learning algorithms can generally be classified into three types: supervised learning, unsupervised learning, and reinforcement learning, as shown in Table 9.

Table 10 lists popular supervised learning algorithms and their characteristics.

Among the many machine learning methods, the rapid development of deep learning in recent years has pushed it to the forefront of the field of AI.

Deep learning is the ANN with deep structures or multi-hidden layers [229-232] .

It can achieve good performance with the support of big data and complex physics, and has a much simpler mathematical form than many traditional machine learning algorithms.

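(Starting from the 3D atomic coordinates and atomic numbers of the constituent atoms of a molecule or crystal, first-principles calculations yield quantities such as the energy, electron distribution, and energy bands. If an ANN is trained with the 3D coordinates and atomic numbers as inputs and the first-principles results as labels, then feeding the coordinates and atomic numbers of a new structure into the trained ANN yields the energy, electron distribution, and energy bands with an accuracy comparable to that of an actual first-principles calculation.)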

The relationship between AI, machine learning, and deep learning is shown in Fig. 2 , along with the number of US patent applications per year [20] .

We can expect that deep learning, such as physics-informed learning, will become the most important path to AI.

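(ANNs used for image classification or natural language processing acquire their classification or translation ability by learning from scratch from training data. In applications to the natural sciences, however, building the fundamentals of physics and chemistry into the ANN is making it possible for the ANN to produce results in those fields with accuracy equal to or better than that of large-scale computation, and in a very short time.)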

However, deep learning relies on big data, and thus traditional machine learning still has strong applications, especially in interdisciplinary studies, and can solve problems with reasonable amounts of data.

Many open-source machine learning frameworks have been developed and made available to the general public, including Scikit-Learn, Caffe2, H2O, PyTorch (for neural networks), TensorFlow (for neural networks), and Keras (for neural networks).

4.2. Machine learning for performance prediction

PEMFC performance is characterized by the polarization curve, also called the I-V curve, which is determined by a number of factors including fuel cell dimensions, material properties, operation conditions, and electrochemical/physical processes [233-236] .

Various physical models and experimental methods have been proposed to predict or directly measure the I-V curve; these are reviewed in many other works [158, 160, 202, 237].

As an alternative approach, machine learning is capable of establishing the relationship between inputs and output performance through proper training of existing data, as shown in Fig. 18 .

Mehrpooya et al. [233] experimentally constructed a database of PEMFC performance under various inlet humidity, temperature, and oxygen and hydrogen flow rates.

A two-hidden-layer ANN was then trained using the database to predict the performance under new conditions.

The database contains 460 points in total, 400 for training and 60 for testing; R² values of 0.982 (training) and 0.9723 (test) were achieved in their study.
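
The 400/60 split and the R² metric mentioned above can be sketched in a few lines. This is only an illustration using the standard library; the helper names `r2_score` and `split_indices` and the random seed are assumptions made here, not details taken from Mehrpooya et al.

```python
import random

def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_y = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot

def split_indices(n_total, n_train, seed=0):
    """Shuffle indices 0..n_total-1 and split them into train and test sets."""
    idx = list(range(n_total))
    random.Random(seed).shuffle(idx)
    return idx[:n_train], idx[n_train:]

# 460 data points: 400 for training, 60 held out for testing.
train_idx, test_idx = split_indices(460, 400)
```

An R² of 1 means the predictions reproduce the measurements exactly, while a model that always predicts the mean of the data scores 0.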

(At this level of content, I think the payoff is small relative to the effort required.)

Unlike physical models, the mapping between inputs and outputs constructed by machine learning models does not follow an actual physical process; thus, the machine learning approach is also called a black-box model.

Machine learning has unique advantages in PEMFC modeling, which requires no prior knowledge, especially of the complex coupled transport and electrochemical processes occurring in PEMFC operation.

This significantly reduces the level of modeling difficulty and also makes it possible to take into account any processes in which the physical mechanisms are not yet known or formulated.

The machine learning method is also advantageous in terms of computational efficiency in the implementation process after proper training.

This characteristic makes machine learning potentially extremely important in the practical PEMFC applications which usually involve a large size multiple-cell system, dynamic variation, and long-term operation.

For a complex physical model that takes multi-physics into account, the computational and time costs are usually too high, whereas a simplified physical model lacks high prediction accuracy.

For even a small scale stack of 5–10 cells, physics model-based 3D simulation usually requires 10–100 million gridpoints and takes days or weeks for predicting one case of steady-state operation [ 158 , 160 , 241 ].

In this regard, machine learning could greatly help to broaden the application of complex physical models by leveraging on prediction accuracy and computational efficiency.

Using the simulation data from complex physical models to train a machine learning model is a popular approach, usually referred to as surrogate modeling.

A surrogate model can replace the complex physical model with similar prediction accuracy but higher computational efficiency.
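
The surrogate idea can be illustrated as follows: run the costly model a limited number of times, then answer new queries from a cheap fit to those runs. In this sketch `expensive_model` is a made-up stand-in for a physics simulation, and piecewise-linear interpolation is swapped in for the SVM or ANN used in the cited studies, purely to keep the example dependency-free.

```python
import bisect
import math

def expensive_model(x):
    """Stand-in for a costly physics simulation (hypothetical smooth response)."""
    return math.exp(-x) + 0.1 * x

def build_surrogate(xs, ys):
    """Return a cheap piecewise-linear interpolant through the sampled points."""
    def surrogate(x):
        # Clamp to the sampled range, then interpolate between neighbors.
        if x <= xs[0]:
            return ys[0]
        if x >= xs[-1]:
            return ys[-1]
        j = bisect.bisect_right(xs, x)
        x0, x1 = xs[j - 1], xs[j]
        w = (x - x0) / (x1 - x0)
        return (1 - w) * ys[j - 1] + w * ys[j]
    return surrogate

xs = [i * 0.1 for i in range(31)]        # 31 "simulation runs" on [0, 3]
ys = [expensive_model(x) for x in xs]    # the training database
surrogate = build_surrogate(xs, ys)

# Compare surrogate and original model at points not in the database.
check_points = [0.05 + 0.1 * i for i in range(30)]
max_err = max(abs(surrogate(x) - expensive_model(x)) for x in check_points)
```

Once built, the surrogate never calls the expensive model again, which is where the speed-up comes from.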

Wang et al. [242] developed a 3D fuel cell model with a CL agglomerate sub-model to construct a database of the PEMFC performance with various CL compositions.

A data-driven surrogate model based on the SVM was then trained using the database, which exhibited comparable prediction capability to the original physical model with computational efficiency several orders of magnitude higher.

It only took a second to predict an I-V curve using the surrogate model versus hundreds of processor-hours using the 3D physics-based model.

Owing to its computational efficiency, the surrogate model, coupled with a genetic algorithm (GA), is suitable for CL composition optimization.
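
A rough sketch of why a fast surrogate pairs well with a GA: the optimizer evaluates the fitness function hundreds of times, which is only affordable when each evaluation is cheap. The one-variable surrogate `surrogate_performance`, its optimum at 0.3, and all GA settings below are hypothetical choices for illustration; they are not taken from Wang et al. [242].

```python
import random

def surrogate_performance(ionomer_fraction):
    """Hypothetical surrogate: performance peaks at an ionomer fraction of 0.3."""
    return 1.0 - (ionomer_fraction - 0.3) ** 2

def genetic_search(fitness, low, high, pop_size=20, generations=40, seed=0):
    """Minimal real-coded GA with truncation selection, blending, and mutation."""
    rng = random.Random(seed)
    pop = [rng.uniform(low, high) for _ in range(pop_size)]
    for _ in range(generations):
        # Selection: keep the better half of the population unchanged.
        pop.sort(key=fitness, reverse=True)
        parents = pop[: pop_size // 2]
        children = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            child = 0.5 * (a + b)              # crossover: blend two parents
            child += rng.gauss(0.0, 0.02)      # mutation: small random step
            children.append(min(high, max(low, child)))
        pop = parents + children
    return max(pop, key=fitness)

best = genetic_search(surrogate_performance, 0.0, 1.0)
```

With these settings the search calls the fitness function many times per generation, so replacing a simulation that takes processor-hours with a surrogate that takes a second changes what is practical.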

Similarly, Khajeh-Hosseini-Dalasm et al. [243] combined a CL physical model and ANN to develop a surrogate model to predict the cathode CL performance and activation overpotential.

For fast prediction of the multi-physics state of a PEM fuel cell, Wang et al. [244] developed a data-driven digital twinning framework, as shown in Fig. 20.

A database of temperature, gas reactant, and water content fields in a PEM fuel cell under various operating conditions was constructed using a 3D physical model.

Both ANN and SVM were used to solve the multi-physics data with spatial distribution characteristics.

The data-driven digital twinning framework mirrored the distribution characteristics of multi-physics fields, and ANN and SVM exhibited different prediction performances on different physics fields.

There is a great potential to improve the current two-phase models (e.g. the two-fluid and mixture approaches) of PEM fuel cells by using AI technology, for example, machine learning analysis of visualization data and VOF/LBM simulation results.

Physics-informed neural networks were recently proposed by Raissi et al. [174] , known as hidden fluid mechanics (HFM), to encode the Navier-Stokes (NS) equation into deep learning for analyzing fluid flow images, as shown in Fig. 21 .

Such a strategy can be extended to the deep learning of two-phase flow and fuel cell performance by incorporating relevant physics, such as the capillary pressure correlation, Darcy’s law, and the Butler-Volmer equation, into the neural networks.
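
Two of the relations named above have simple closed forms, and a physics-informed network would penalize predictions that violate them. The sketch below writes the Butler-Volmer equation and Darcy's law as plain functions and defines a residual that such a penalty could be built on. The default transfer coefficients, the temperature of 353.15 K, and the function names are illustrative assumptions.

```python
import math

F = 96485.0   # Faraday constant, C/mol
R = 8.314     # gas constant, J/(mol K)

def butler_volmer(eta, i0, alpha_a=0.5, alpha_c=0.5, temperature=353.15):
    """Current density from overpotential eta (V) via the Butler-Volmer equation."""
    f = F / (R * temperature)
    return i0 * (math.exp(alpha_a * f * eta) - math.exp(-alpha_c * f * eta))

def darcy_velocity(permeability, viscosity, dp_dx):
    """Superficial velocity from Darcy's law: u = -(K / mu) * dp/dx."""
    return -(permeability / viscosity) * dp_dx

def physics_residual(i_predicted, eta, i0):
    """Mismatch between a predicted current density and Butler-Volmer."""
    return i_predicted - butler_volmer(eta, i0)
```

A prediction that satisfies the kinetics exactly gives a zero residual; squaring and averaging such residuals over many operating points would give a physics term that can be added to the usual data loss.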

Table 11 summarizes the main physics in each PEMFC component that deep learning can incorporate to effectively achieve the design targets.

4.3. Machine learning for material selection

Machine learning is widely used in the chemistry and material communities to discover new material properties and develop next generation materials [245-247] .

Experimental measurement, characterization, and theoretical calculation are the main traditional methods for diagnosing or predicting the properties of a material, and they are usually expensive in terms of cost, time, and computational resources.

Material properties are influenced by many intricate factors, which increases the difficulty level in the search for optimal material synthesis using only traditional methods.

Machine learning can assist in material selection and property prediction using existing databases, which is advantageous in taking into account unknown physics and greatly increasing the efficiency.

As an example, adsorbate binding energy prediction based on the empirical Sabatier principle is widely used for optimizing activity in catalyst design (Fig. 22(a)) [247].

To remove the empirical equation, a database of binding energy for different catalyst structures constructed by characterization or theoretical calculation is used to train a machine learning model, which shows a great efficiency in predicting the catalyst activity in a wide range to identify the optimal solution of the catalyst structure ( Fig. 22 (b)).

Owing to the great potential of machine learning in chemistry and materials science, professional tools have been developed alongside universal machine learning frameworks, and numerous structure and property databases for molecules and solids can be easily accessed for model training.

Popular professional machine learning tools and databases are summarized in Table 12.

4.4. Machine learning for durability

A durable and stable PEM fuel cell that is reliable for the entire life of the system is crucial for its commercialization.

Thus, it is important to predict the state of health (SoH), the remaining useful life (RUL), and durability of PEM fuel cell using the data generated from monitoring units.

The cell voltage is the most important indicator of fuel cell performance and is thus a popular output parameter in machine learning.

In recent years, machine learning has been employed to predict fuel cell durability and SoH, which can generally be classified as model-based and data-driven approaches.

I searched Google Scholar for "fuel cell + materials informatics" and "fuel cell + deep learning".

For "+ materials informatics", only two papers published since 2020 have "materials informatics" in the title. Both have Japanese authors.

For "+ deep learning", there are more than 10, and about 30 if machine learning, reinforcement learning, and similar terms are included.

My intuition is that materials informatics took in machine learning and deep learning as one of its search tools, was strengthened as a result, and that machine learning and deep learning now drive it as its main component.

July 18 (Sun)

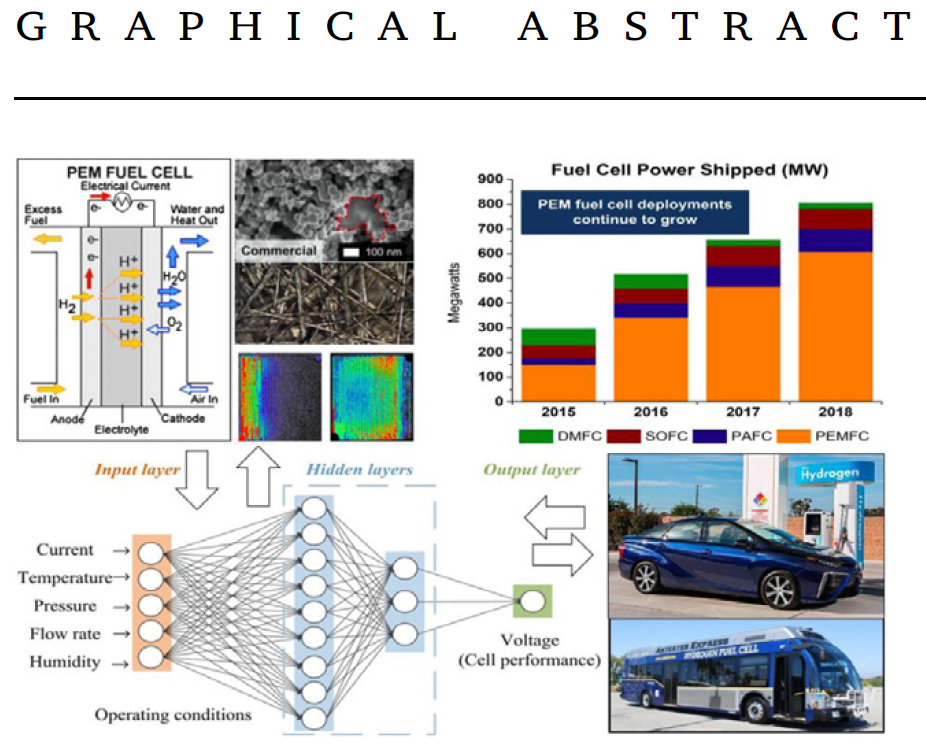

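Table 1(a) shows the trend in shipments of four types of fuel cells, and (b) shows the configuration/structure of a PEMFC (polymer electrolyte membrane fuel cell).

DMFC: Direct Methanol Fuel Cell, SOFC: Solid Oxide Fuel Cell, PAFC: Phosphoric Acid Fuel Cell, PEMFC: Polymer Electrolyte Membrane Fuel Cell

The polymer electrolyte membrane is at the center of the cell, with the anode catalyst on its left and the cathode catalyst on its right; further out are the hydrogen diffusion layer on the left and the air (oxygen source) diffusion layer on the right. Coolant flows through the outermost layer on the cathode side. On the anode side, hydrogen gas is converted into protons and electrons; on the cathode side, protons, oxygen, and electrons are converted into water.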

1.2. Current status and technical barriers

The challenges for PEMFCs are durability and cost.

Catalyst-layer cost and cell durability are in conflict with each other.

1.3. Role of fundamentals, materials, and machine learning

July 20 (Tue)

4.2. Machine learning for performance prediction

Let's look at reference [174].

Hidden fluid mechanics: Learning velocity and pressure fields from flow visualizations

Maziar Raissi, Alireza Yazdani, and George Em Karniadakis, Science 367, 1026–1030 (2020)

For centuries, flow visualization has been the art of making fluid motion visible in physical and biological systems. Although such flow patterns can be, in principle, described by the Navier-Stokes equations, extracting the velocity and pressure fields directly from the images is challenging. We addressed this problem by developing hidden fluid mechanics (HFM), a physics-informed deep-learning framework capable of encoding the Navier-Stokes equations into the neural networks while being agnostic to the geometry or the initial and boundary conditions. We demonstrate HFM for several physical and biomedical problems by extracting quantitative information for which direct measurements may not be possible. HFM is robust to low resolution and substantial noise in the observation data, which is important for potential applications.

We developed an alternative approach, which we call hidden fluid mechanics (HFM), that simultaneously exploits the information available in snapshots of flow visualizations and the NS equations, combined in the context of physics-informed deep learning (5) by using automatic differentiation. In mathematics, statistics, and computer science—in particular, in machine learning and inverse problems—regularization is the process of adding information in order to prevent overfitting or to solve an ill-posed problem. The prior knowledge of the NS equations introduces important structure that effectively regularizes the minimization procedure in the training of neural networks. For example, using several snapshots of concentration fields (inspired by the drawings of da Vinci in Fig. 1A), we obtained quantitatively the velocity and pressure fields (Fig. 1, B to D).
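
The regularizing role of the governing equation can be shown on a toy problem. The sketch below is not the HFM implementation: a one-dimensional decay equation du/dx + k·u = 0 stands in for the NS equations, central finite differences stand in for automatic differentiation, and two fixed candidate functions stand in for a trainable network. All names and numbers are assumptions chosen for illustration.

```python
import math

def physics_informed_loss(model, data_x, data_u, colloc_x, k, weight=1.0, h=1e-4):
    """Data misfit plus a penalty on the residual of du/dx + k*u = 0."""
    data_loss = sum((model(x) - u) ** 2 for x, u in zip(data_x, data_u)) / len(data_x)
    residuals = []
    for x in colloc_x:
        dudx = (model(x + h) - model(x - h)) / (2 * h)   # central difference
        residuals.append(dudx + k * model(x))
    physics_loss = sum(r ** 2 for r in residuals) / len(residuals)
    return data_loss + weight * physics_loss

k = 2.0
data_x = [0.0, 1.0]                          # only two "observations"
data_u = [math.exp(-k * x) for x in data_x]
colloc_x = [0.1 * i for i in range(11)]      # points where the physics is enforced

def exact(x):
    """Candidate that satisfies the governing equation."""
    return math.exp(-k * x)

def line(x):
    """Candidate that fits both observations but ignores the physics."""
    return 1.0 + (math.exp(-k) - 1.0) * x

loss_exact = physics_informed_loss(exact, data_x, data_u, colloc_x, k)
loss_line = physics_informed_loss(line, data_x, data_u, colloc_x, k)
```

Both candidates match the two observations, so a data-only loss cannot tell them apart; the physics term is what separates them, which is the sense in which prior knowledge of the equations regularizes an otherwise ill-posed fit.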

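July 28 (Wed)

Background knowledge:

W. J. Moore, Physical Chemistry (Japanese edition, translated by Ryoichi Fujishiro (藤代亮一)):

Chapter 8: Chemical Kinetics

37. Activated adsorption

The potential-energy barrier that must be surmounted for adsorption to occur is often small or negligible, so the rate of adsorption is governed by the rate at which gas is supplied to the bare surface. In some cases, however, adsorption requires an appreciable activation energy Ead; the adsorption rate, A·exp(−Ead/RT), may then become small enough to determine the overall rate of the surface reaction. Adsorption that requires such an appreciable activation energy is called activated adsorption.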
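
The rate expression A·exp(−Ead/RT) can be evaluated directly to see how strongly the barrier height matters. The prefactor, the two activation energies, and the temperature below are hypothetical values chosen only to show the trend.

```python
import math

R = 8.314  # gas constant, J/(mol K)

def adsorption_rate(prefactor, activation_energy, temperature):
    """Arrhenius-type rate A * exp(-Ead / (R * T)); Ead in J/mol, T in K."""
    return prefactor * math.exp(-activation_energy / (R * temperature))

# Hypothetical numbers, same prefactor and temperature, different barriers.
fast = adsorption_rate(1.0, 5e3, 673.15)     # small barrier
slow = adsorption_rate(1.0, 100e3, 673.15)   # large barrier (activated adsorption)
```

Because the barrier sits in the exponent, raising Ead from 5 to 100 kJ/mol lowers the rate by many orders of magnitude at the same temperature, which is how an adsorption step can become rate-determining.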

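In general, chemisorption of gases on metal surfaces does not require much activation energy. J. K. Roberts showed that the adsorption of hydrogen on carefully cleaned metal wires proceeds rapidly even at about 25 K, forming a monolayer of strongly adsorbed hydrogen atoms. The heat of adsorption in this case is close to the heat required to form the covalent bonds of a metal hydride.

... One important exception to this behavior is the adsorption of nitrogen on an iron catalyst at 400 °C. This is a slow activated adsorption and appears to be the rate-determining step of ammonia synthesis over this catalyst. ...

38. Catalyst poisoning

Catalysts are poisoned by very small amounts of foreign substances. Faraday stressed that platinum used as a catalyst for the combination of H2 and O2 must be clean and free of grease, and that the reactant gases must not contain carbon monoxide. The highly effective catalytic action of platinum in oxidizing SO2 to SO3 was well known early in the 19th century, but it was not used industrially because the catalyst quickly lost its activity. Only when highly purified reactant gases, free of sulfur and arsenic compounds, became available could the reaction be sustained for long periods.

It is no coincidence that catalyst poisons such as CO, H2S, and arsenic compounds are also strong physiological poisons. They are toxic to animals because they poison the enzymes that catalyze biochemical reactions essential to life, thereby inhibiting those reactions.

Catalyst poisons and reactants compete for the available catalyst surface. ... This raises an important question: does the degree of catalyst deactivation correspond quantitatively to the fraction of the surface occupied by the poison? In some cases it does, but cases are also well known in which a small amount of poison causes far greater inhibition than this surface-area effect alone can explain.

39. Nature of catalyst surfaces

Even a solid surface that is macroscopically smooth is rough on the scale of 10 Å. Examination of cleaved crystal faces with the best optical techniques shows that they are stepped surfaces. Experiments on photoelectric emission and thermionic emission from metals show that the surface consists of regions with different work functions. ... Crystal edges and corners, grain boundaries, and other physical irregularities of the surface are thought to act as active centers with unusually high catalytic activity.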

July 29 (Thu)

Let's return to the original paper.

Fundamentals, materials, and machine learning of polymer electrolyte membrane fuel cell technology, Yun Wang et al., Energy and AI 1 (2020) 100014

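Hydrogen Oxidation Reaction (HOR):

In the anode catalyst layer, hydrogen gas (molecular hydrogen) is oxidized (loses electrons), producing protons (H+) and electrons.

The protons (H+) generated by the HOR travel through the polymer electrolyte membrane (PEM) layer to the cathode catalyst layer.

Oxygen Reduction Reaction (ORR):

In the cathode catalyst layer, oxygen gas (molecular oxygen) is reduced (gains electrons and combines with hydrogen) by reacting with protons (H+), producing water (H2O).

In the figure above, the layers are stacked from left to right as follows: hydrogen gas diffusion layer, anode catalyst layer, polymer electrolyte layer, cathode catalyst layer, and oxygen gas diffusion layer.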
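
For reference, the two half-reactions described above and their sum can be written out as balanced equations. This is standard electrochemistry rather than something quoted from the paper.

```latex
\begin{align}
\text{HOR (anode):}   \quad & \mathrm{H_2} \rightarrow 2\,\mathrm{H^+} + 2\,e^- \\
\text{ORR (cathode):} \quad & \tfrac{1}{2}\,\mathrm{O_2} + 2\,\mathrm{H^+} + 2\,e^- \rightarrow \mathrm{H_2O} \\
\text{Overall:}       \quad & \mathrm{H_2} + \tfrac{1}{2}\,\mathrm{O_2} \rightarrow \mathrm{H_2O}
\end{align}
```

The protons cross the membrane while the electrons travel through the external circuit, so both species produced at the anode are consumed at the cathode.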

3. Fundamentals and materials

For the hydrogen oxidation reaction (HOR) and oxygen reduction reaction (ORR) to proceed efficiently, the materials used in fuel cells must be chosen so that a high beginning of life performance and durability are ensured.

For example, to improve the activation and reduce transport losses, various issues as discussed earlier need to be addressed, including durable electrocatalyst and its loading reduction [2] , reactant/membrane contamination [ 91 , 92 ], water management [ 93 , 94 ], and degradation [ 95 , 96 ].

Material advance and improvement are therefore important for fuel cell R&D, and fundamentals that establish the material properties and fuel cell performance under various operation conditions are highly needed.

3.1. Materials

3.1.1. Membrane

The PEM is located between the anode and cathode CLs.

Its main functions are two-fold:

(i) it acts as a separator between the anode and the cathode reactant gasses and electrons, and

(ii) it conducts protons from the anode to cathode CLs.

Therefore, as a separator it must be impermeable to gasses (i.e., it should not allow the crossover of hydrogen and oxygen) and must be electrically insulating.

In addition, the membrane material must withstand the harsh operating conditions of PEM fuel cells, and thus possess high chemical and mechanical stability [97] .

This is an SEM image of Nafion XL.

August 4 (Wed)

3.1.2. Catalyst layers

Catalyst layers (CLs) are the component where the electrochemical reactions occur.

The CL material must provide continuous pathways for various reactant species; primarily,

(i) a path for proton transport,

(ii) a pore network for gaseous reactant supply and water removal, and

(iii) a passage for electron conduction between the CL and the current collector.

The CL material is a major factor affecting fuel cell performance and durability.

Conventional CLs are composed of electrocatalyst, carbon support, ionomer, and void space.

Optimization of the CL ink preparation has been the main driver in PEMFC development [ 21 , 102 ].

This breakthrough highlights the importance of the so-called triple-phase boundaries of the ionomer, Pt/C, and void space so that all reactants could access for the reactions.

Conventional CLs are prepared based on the dispersion of a catalyst ink comprising a Pt/C catalyst, ionomer, and solvent.

Ink composition is important for aggregation of the ionomer and agglomeration of carbon particles, and the dispersion medium governs the ink’s properties, such as the aggregation dimension of the catalyst/ionomer particles, viscosity, and rate of solidification, and ultimately, the electrochemical and transport properties of the CLs [103-105] .

The ionomer acts not only as a binder for the Pt/C particles but also as a proton conductor.

Imbalance in the ionomer loading increases the transport or ohmic loss, with a small amount of ionomer reducing the proton conductivity and a large amount increasing the transport resistance of gaseous reactants.

(Note: too much ionomer lowers the porosity, which is why the transport of gaseous reactants degrades.)

August 5 (Thu)

Understanding inks for porous-electrode formation

Kelsey B. Hatzell, Marm B. Dixit, Sarah A. Berlinger and Adam Z. Weber J. Mater. Chem. A, 5, 20527 (2017)

Scalable manufacturing of high-aspect-ratio multi-material electrodes is important for advanced energy storage and conversion systems. Such technologies often rely on solution-based processing methods where the active material is dispersed in a colloidal ink. To date, ink formulation has primarily focused on macro-scale process-specific optimization (i.e. viscosity and surface/interfacial tension), and has been optimized mainly empirically. Thus, there is a further need to understand nano- and mesoscale interactions and how they can be engineered for controlled macroscale properties and structures related to performance, durability, and material utilization in electrochemical systems.

In summary, there is a growing need for fabricating porous electrodes with unprecedented control of layer composition. Key to this is knowledge of the underlying physics and phenomena going from multicomponent dispersions and inks to casting/processing to 3D structure. While there has been some recent work as highlighted herein, a great deal remains to be accomplished in order to inform predictive and not empirical optimizations. Such investigations have occurred in other fields such as semiconductors and coatings and dispersions in general, but this has not been translated to thin-film properties and functional layers as occur in electrochemical devices. Overall, ink engineering is an exciting opportunity to achieve next-generation composite materials, but requires systematic studies to elucidate design rules and metrics and identify controlling parameters and phenomena.

Let's return to the original paper.

Fundamentals, materials, and machine learning of polymer electrolyte membrane fuel cell technology, Yun Wang et al., Energy and AI 1 (2020) 100014

In contrast, non-conventional CLs are structured such that one of the major ingredients in their conventional counterparts is eliminated [ 2 , 102 ].

Nanostructured thin film (NSTF) CLs from 3M are the most successful non-conventional CLs.

They consist of whiskers where the catalyst is deposited without ionomer for proton conduction.

Over the years, they have proven to provide a higher activity than conventional CLs, as seen in Fig. 5 .

In addition, similar to conventional CLs, annealing can be used to change the CL structure and ultimately change its activity.

Fig. 5. Schematic illustration and corresponding HRTEM images of the mesoscale ordering during annealing and formation of the mesostructured thin film starting from the as-deposited Pt–Ni on whiskers (A), annealed at 300 °C (B) and 400 °C (C). Specific activities of Pt–Ni NSTF as compared to those of polycrystalline Pt and Pt-NSTF at 0.9 V (D) [106] .

[106] van der Vliet DF , Wang C , Tripkovic D , et al. Mesostructured thin films as electrocatalysts with tunable composition and surface morphology. Nat Mater 2012;11:1051–8 .

August 10 (Tue): picking up the pace

Carbon is the most commonly used support material for catalyst because of its low cost, chemical stability, high surface area, and affinity for metallic nanoparticles.

The surface area of the support varies depending on its graphitization process and is reported to range from 10 to 2000 m²/g [107].

Ketjen Black and Vulcan XC-72 are popular carbons with a surface area of 890 m²/g and 228 m²/g, respectively [108].

Carbon tends to aggregate, forming carbon particle agglomerates with a bimodal pore size distribution (PSD).

This PSD is usually composed of primary pores, typically 2–20 nm in size, and secondary pores larger than 20 nm.

The primary pores are located between carbon particles in an agglomerate, while the secondary pores are between agglomerates.
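
The primary/secondary distinction amounts to a size threshold at about 20 nm, which can be sketched as a small classifier. The sample diameters are invented, the function names are hypothetical, and for simplicity this sketch labels everything at or below the boundary as primary, including pores smaller than the typical 2 nm lower bound.

```python
def classify_pore(diameter_nm, boundary_nm=20.0):
    """Label a pore as primary (<= boundary) or secondary (> boundary)."""
    return "primary" if diameter_nm <= boundary_nm else "secondary"

def pore_fractions(diameters_nm):
    """Fraction of pores in each class, by count."""
    labels = [classify_pore(d) for d in diameters_nm]
    n = len(labels)
    return {
        "primary": labels.count("primary") / n,
        "secondary": labels.count("secondary") / n,
    }

sample = [3.0, 8.0, 15.0, 40.0, 120.0]   # hypothetical pore diameters in nm
fractions = pore_fractions(sample)
```

A measured pore size distribution would normally be weighted by pore volume rather than by count; the count-based version is used here only to keep the example short.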

Depending on the Pt distribution and utilization within an agglomerate, the primary pores play a key role in determining the electrochemical kinetics, while the secondary pores are important for reactant transport across a CL.

The portion of the primary and secondary pores is largely determined by the surface area of the carbon support [108] .

Hence, it has been reported that carbon supports also determine the optimal ionomer content and the Pt distribution in CLs [ 109 , 110 ].

Additionally, the anode overpotential is usually considered negligible in comparison with its cathode counterpart because of the sluggish ORR.

Thus, most work in the literature is focused on cathode CLs.

CL optimization is focused on not only enhanced durability but also reduction of the Pt loading.

For this purpose, it is crucial to determine the optimal combination of the carbon support and catalyst for loading reduction.

An example is highlighted in Fig. 6, where different carbons are heat-treated to induce the catalytic activities of PANI-derived catalysts and to ensure their performance and stability.

Rotating Ring-Disk Electrode (RDE) measurements were conducted to study the ORR activity of various heat-treated PANI-C catalysts as a function of temperature.

The durability and stability of CL material are a major subject in R&D, which is related to multiple factors, mainly including (i) operating and environmental conditions, (ii) oxidant and fuel impurities, and (iii) contaminants and corrosion in cell components.

For instance, operation under high voltages (above 1.35 V), which may occur during fuel cell startup and shut-down, can lead to Pt dissolution [112] .

Operation further above this voltage will cause degradation of the carbon support, known as carbon corrosion.

In addition, any traces of a contaminant in the fuel or oxidant feeds can lead to a decrease in fuel cell performance by poisoning CL materials [ 113 , 114 ].

Some contaminants cover the Pt catalyst and then reduce the electrochemical surface area (ECSA) available for the reaction.

This catalytic contamination is usually reversible upon removal of the contaminants.

In certain instances, contaminants such as ammonia will cause irreversible degradation under adequate exposure time and concentration [44] .

Further, cell components, such as CLs and BPs, may contain contaminants from their manufacturing process and/or the materials used, which eventually leach out and poison the MEA.

This may include membrane poisoning by metallic cations [91] .

To date, Pt is the electrocatalyst of choice for the ORR in PEM fuel cells because of its high activity.

However, Pt is costly and is currently mined mainly in a few countries, such as South Africa and Russia.

Furthermore, high Pt loading is required to reach the target lifetime without major efficiency loss.

Using state-of-the-art methods, Pt catalyst is distributed in a way that does not allow its full utilization in CLs [ 115 , 116 ].

Alternative catalysts that are either Pt free or Pt alloys are under research.

Two excellent review papers on the topic are Refs. [117, 118].

A summary of some of these catalysts, their current status, and remaining challenges is provided in Fig. 7 .

Machine learning and AI are extremely helpful and in high demand for CL development, given that CLs have been extensively studied not only for PEM fuel cells but also for many other systems, such as electrolyzers and sensors with Pt-catalyst electrodes.

The species transport equations, ORR reaction kinetics, two-phase flow, and degradation mechanisms can be encoded into the neural networks for effective physics-informed deep learning to understand the impacts of catalyst materials on fuel cell performance/durability and optimize the pore size, PSD, PTFE loading, ionomer content, and carbon and electrocatalyst loading.

In the mass production phase, machine learning and AI can assist the quality control of CL composition in signal processing and element analysis when integrated with detection techniques such as Laser Induced Breakdown Spectroscopy (LIBS) [119] .

Literature search: keywords: fuel cell deep learning

F.-K. Wang et al.: Hybrid Method for Remaining Useful Life Prediction of PEMFC Stack

ABSTRACT

Proton exchange membrane fuel cell (PEMFC) is a clean and efficient alternative technology for transport applications. The degradation analysis of the PEMFC stack plays a vital role in electric vehicles. We propose a hybrid method based on a deep neural network model, which uses the Monte Carlo dropout approach called MC-DNN and a sparse autoencoder model to analyze the power degradation trend of the PEMFC stack. The sparse autoencoder can map high-dimensional data space to low-dimensional latent space and significantly reduce noise data. Under static and dynamic operating conditions, using two experimental PEMFC stack datasets, the predictive performance of our proposed model is compared with some published models. The results show that the MC-DNN model is better than other models. Regarding the remaining useful life (RUL) prediction, the proposed model can obtain more accurate results under different training lengths, with relative errors between 0.19% and 1.82%. In addition, the prediction interval of the predicted RUL is derived by using the MC dropout approach.
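
The general idea of Monte Carlo dropout is to leave dropout switched on at prediction time, repeat the forward pass many times, and read a prediction interval off the spread of the outputs. The sketch below shows that idea on a tiny fixed network; the weights, dropout rate, and number of passes are hypothetical, and nothing here reproduces the MC-DNN architecture of the paper.

```python
import random

def stochastic_forward(x, w_hidden, w_out, drop_prob, rng):
    """One forward pass of a tiny ReLU network with dropout left switched on."""
    total = 0.0
    for wh, wo in zip(w_hidden, w_out):
        if rng.random() < drop_prob:
            continue                                   # this hidden unit is dropped
        activation = max(0.0, wh * x)
        total += wo * activation / (1.0 - drop_prob)   # inverted-dropout scaling
    return total

def mc_dropout_predict(x, w_hidden, w_out, drop_prob=0.2, passes=500, seed=0):
    """Mean prediction and an approximate 95% interval from repeated passes."""
    rng = random.Random(seed)
    samples = sorted(stochastic_forward(x, w_hidden, w_out, drop_prob, rng)
                     for _ in range(passes))
    lower = samples[int(0.025 * passes)]
    upper = samples[int(0.975 * passes) - 1]
    return sum(samples) / passes, (lower, upper)

# Hypothetical "trained" weights; a real model would learn these from data.
w_hidden = [0.5, 1.0, 1.5, 2.0]
w_out = [0.4, 0.3, 0.2, 0.1]
mean, (low, high) = mc_dropout_predict(2.0, w_hidden, w_out)
```

Without dropout this network outputs 2.0 for the input 2.0; the inverted-dropout scaling keeps the average of the stochastic passes near that value, while the interval reflects how much the output moves when units are randomly removed.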

It appears to use the data from the IEEE PHM 2014 Data Challenge.

Y. Xie et al.: Novel DBN and ELM Based Performance Degradation Prediction Method for PEMFC

ABSTRACT

Lifetime and reliability seriously affect the applications of proton exchange membrane fuel cell (PEMFC). Performance degradation prediction of PEMFC is the basis for improving the lifetime and reliability of PEMFC. To overcome the lower prediction accuracy caused by uncertainty and nonlinearity characteristics of degradation voltage data, this article proposes a novel deep belief network (DBN) and extreme learning machine (ELM) based performance degradation prediction method for PEMFC. A DBN based fuel cell degradation features extraction model is designed to extract high-quality degradation features in the original degradation data by layer-wise learning. To tackle the issues of overfitting and instability in fuel cell performance degradation prediction, an ELM with good generalization performance is introduced as a nonlinear prediction model, which can get some enhancement of prediction precision and reliability. Based on the designed DBN-ELM model, the particle swarm optimization (PSO) algorithm is used in the model training process to optimize the basic network structure of DBN-ELM further to improve the prediction accuracy of the hybrid neural network. Finally, the proposed prediction method is experimentally validated by using actual data collected from the 5-cell PEMFC stack. The results demonstrate that the proposed approach always has better prediction performance compared with the existing conventional methods, whether in the cases of various training phases or the cases of multi-step-ahead prediction.

I. INTRODUCTION

Proton exchange membrane fuel cells (PEMFCs) have been regarded as a potential power generation system for many fields, including electric vehicles, aerospace electronics, and aircraft [1], [2], owing to their high conversion efficiency, low operating temperature, and clean reaction products [3], [4].

However, the fuel cell system is affected by multiple factors during operation, which reduces its reliability and shortens its lifetime [5].

Therefore, predicting the performance degradation can effectively indicate the health status of PEMFCs, which could provide a maintenance plan to reduce the failures and downtimes of PEMFCs, thereby extending their lifetime and increasing their reliability [6], [7].

The degradation prediction of PEMFCs can use the historical operating data, such as voltage, power, and impedance, to obtain early indications about fuel cell degradation trend and failure time [8].

The voltage drop is directly associated with failure modes and components aging of fuel cells, and it is also the easiest to obtain.

Thus, the voltage is commonly treated as the critical deterioration indicator reflecting the performance degradation of PEMFC [9], [10].

Current aging voltage prediction approaches can be grouped into two categories: model-based methods and data-based methods [11].

The model-based methods use the specific physical model or semi-empirical degradation model to provide the degradation estimation for the fuel cells.

However, their reliability is limited because the degradation mechanisms inside PEMFCs are still not fully understood [12].

Some other model-based methods use particle filter [13], Kalman filter [14], and their variants to estimate the health of PEMFC.

However, because of their limited nonlinear processing capability or low computational efficiency, they struggle to describe the high nonlinearity and complexity of PEMFC aging processes.

From a practical point of view, the data-based methods are more advantageous because they can flexibly represent the degradation features observed in the aging voltage data without any prior knowledge about the fuel cells [15].

Moreover, the data-based methods are easy to deploy, less computationally complex, and more suitable for practical online applications [8].

The existing different data-based methods can be divided into data analytics methods and machine learning methods.

Regression analysis approaches, such as autoregressive integrated moving average methods [15], locally weighted projection regression methods [16], and regime switch vector autoregressive methods [17], are some of the data analytics methods that have been adopted.

A large number of machine learning methods have also made great strides in PEMFC degradation prediction, including support vector machine (SVM) based methods [18], relevance vector machine (RVM) based methods [19], Gaussian process state space based methods [20], back propagation neural network based methods [21], Echo State Network based methods [22], adaptive neuro-fuzzy inference system (ANFIS) based methods [23], extreme learning machine (ELM) based methods [24], and so on.

However, the above data-based methods build the prediction model without considering the degradation characteristics of the voltage data.

Thus they may not achieve better performance.

The actual data contain more fluctuations and noises, which limit the effectiveness of the regression analysis approaches.

In addition, the voltage recovery phenomena that occur during fuel cell voltage degradation are highly nonlinear and cannot be fully captured by the shallow neural networks in [21]–[24].

The general machine learning methods in [18]–[20] not only have weak feature extraction ability but are also affected by many manually chosen factors, such as the construction of their kernel functions [25].

Therefore, to improve the unsatisfactory prediction performance, the designed prediction method should be tightly integrated with data characteristics.

Furthermore, considering the weak feature extraction ability of shallow models, it is better to employ the deep learning architecture for PEMFC degradation prediction.

To overcome the above problems, a novel PEMFC performance degradation prediction model based on the deep belief network (DBN) and extreme learning machine (ELM) is proposed for the first time, which considers the statistical characteristics of original degradation data.

Deep Belief Network, as a deep learning method [26], has achieved state-of-the-art results on challenging modelling and regression problems for highly nonlinear statistical data.

DBN can learn high-quality and robust features from the data through multiple layers of nonlinear feature transformation [27], which achieves high precision recognition on handwritten digits [28] and facial expression [29].

It can also accurately describe the complex mapping relationships between inputs and features and has achieved state-of-the-art results on lifetime prediction problems of Multi-bearing [30], lithium batteries [31] and rotating components [32].

Thus, the DBN method with good feature extraction and expression abilities is adopted in this article to learn the deep PEMFC degradation features from a large number of voltages that contain too much noise and redundant data.

However, the DBN model may encounter problems of overfitting and local minima when a gradient-based learning algorithm is used to obtain the network parameters.

The ELM method with good generalization and universal approximation capability [33] is introduced to solve these limitations.

In the proposed DBN-ELM model, ELM serves as a supervised regressor on the top layer to obtain the solutions directly without such trivial issues [34].

Furthermore, the ELM regressor can employ the deep feature provided by DBN to obtain a relatively stable prediction performance, which can avoid the ill-posed problems [35] in common ELM caused by data statistical characteristics [36] and the initialization mode [37].

In short, the proposed DBN-ELM method employs the DBN to extract high-quality degradation features and generate a relatively stable feature space which is, in turn, fed into an ELM to perform PEMFC degradation voltage prediction.
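To make the ELM half of this pipeline concrete, here is a minimal NumPy sketch of an ELM regressor. It is not the authors' implementation: the DBN feature extractor is replaced by plain sliding windows over a synthetic voltage trace, and the names `fit_elm` and `predict_elm`, the hidden-layer size, and the ridge term are all assumptions made for illustration. The point it shows is that the hidden weights stay random and only the output weights are solved, in one linear least-squares step.

```python
import numpy as np

def fit_elm(X, y, n_hidden=40, ridge=1e-3, seed=0):
    """Fit a single-hidden-layer ELM: random fixed hidden layer, solved output layer."""
    rng = np.random.default_rng(seed)
    W = rng.normal(size=(X.shape[1], n_hidden))  # random input weights, never trained
    b = rng.normal(size=n_hidden)                # random hidden biases, never trained
    H = np.tanh(X @ W + b)                       # hidden-layer activations
    # Output weights from a ridge-regularised least-squares solve (no gradient descent).
    beta = np.linalg.solve(H.T @ H + ridge * np.eye(n_hidden), H.T @ y)
    return W, b, beta

def predict_elm(model, X):
    W, b, beta = model
    return np.tanh(X @ W + b) @ beta

# Synthetic, hypothetical stack voltage (slow decay plus ripple); NOT the PHM 2014 data.
t = np.arange(300)
voltage = 3.3 - 0.0005 * t + 0.005 * np.sin(t / 7.0)

# Sliding windows stand in for the DBN features (assumption).
window = 10
X = np.stack([voltage[i:i + window] for i in range(len(voltage) - window)])
y = voltage[window:]

# Standardise inputs with training statistics so tanh does not saturate.
mu, sd = X[:200].mean(), X[:200].std()
Xn = (X - mu) / sd

model = fit_elm(Xn[:200], y[:200])
pred = predict_elm(model, Xn[200:])
rmse = np.sqrt(np.mean((pred - y[200:]) ** 2))
print(f"one-step-ahead RMSE on held-out windows: {rmse:.4f} V")
```

Because training is a single linear solve, refitting for each candidate network size is cheap, which is presumably what makes a structure search with PSO practical.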

The proposed novel prediction model combines the excellent feature learning ability of DBN and the generalization performance of ELM, which aims to enhance PEMFC degradation prediction performance.

To further improve the prediction accuracy, the particle swarm optimization (PSO) algorithm is adopted as the optimization tool in the design of the DBN-ELM model.

The PSO algorithm, with the advantages of fast search speed, simple structure, and good memory ability [23], is widely used to optimize the structure [38]–[40] and parameters [23], [41], [42] of neural networks (NN).

Thus, this article uses the PSO algorithm with time-varying inertia weight [43] to adjust the structural parameters of the DBN-ELM and improve prediction accuracy.
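The following is a minimal sketch of PSO with a time-varying inertia weight, applied to a toy objective rather than to a DBN-ELM. The linear decrease of the inertia weight from `w_start` to `w_end`, the acceleration constants, and the function name `pso_minimize` are assumptions; the excerpt does not specify the exact schedule used in [43].

```python
import numpy as np

def pso_minimize(f, bounds, n_particles=20, n_iter=200,
                 w_start=0.9, w_end=0.4, c1=1.5, c2=1.5, seed=0):
    """Minimise f over a box with PSO and a linearly decreasing inertia weight."""
    rng = np.random.default_rng(seed)
    lo, hi = np.array(bounds, dtype=float).T
    x = rng.uniform(lo, hi, size=(n_particles, len(lo)))  # particle positions
    v = np.zeros_like(x)                                  # particle velocities
    pbest = x.copy()                                      # personal best positions
    pbest_val = np.array([f(p) for p in x])
    g = pbest[np.argmin(pbest_val)].copy()                # global best position
    g_val = pbest_val.min()
    for k in range(n_iter):
        # Large inertia early (exploration), small inertia late (exploitation).
        w = w_start - (w_start - w_end) * k / max(n_iter - 1, 1)
        r1, r2 = rng.random(x.shape), rng.random(x.shape)
        v = w * v + c1 * r1 * (pbest - x) + c2 * r2 * (g - x)
        x = np.clip(x + v, lo, hi)
        val = np.array([f(p) for p in x])
        improved = val < pbest_val
        pbest[improved] = x[improved]
        pbest_val[improved] = val[improved]
        if pbest_val.min() < g_val:
            g_val = pbest_val.min()
            g = pbest[np.argmin(pbest_val)].copy()
    return g, g_val

# Toy objective with its minimum at (1, 1); a real use would return a validation error.
def sphere(p):
    return float(np.sum((p - 1.0) ** 2))

best_x, best_val = pso_minimize(sphere, [(-5.0, 5.0), (-5.0, 5.0)])
print(best_x, best_val)
```

For structure optimization, each particle would encode quantities such as the number of hidden units per layer (rounded to integers), and `f` would train a model and return its validation error.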

Finally, the proposed DBN-ELM method is verified by different case studies on a 1kW PEMFC experimental platform.

The novelty and contributions of this article can be summarized as follows:

• The degradation characteristics of the experimental voltage data are first analyzed to guide the tailored design of the high-performance prediction model.

• The DBN method is applied for the first time to PEMFC performance degradation prediction, for extracting and learning high-level degradation features.

• The novel DBN-ELM method can accurately infer future voltage degradation changes of the PEMFC stack.

• The PSO algorithm is introduced into the design of the proposed DBN-ELM prediction model to further improve the performance of PEMFC degradation prediction.

• Experimental results demonstrate the accuracy and generalization performance of the proposed method in PEMFC degradation prediction.

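This paper, too, uses the IEEE PHM 2014 Data Challenge dataset, which makes it no different from competing for a score in a Kaggle competition.

Even if a method scores well on a prepared dataset, it is unclear whether it can be used in actual development work. How would it be used?

I was curious about TEM observation of catalyst layers, so I looked into the literature.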

Testing fuel cell catalysts under more realistic reaction conditions: accelerated stress tests in a gas diffusion electrode setup

Shima Alinejad et al., J. Phys.: Energy 2 (2020) 024003

Abstract

Gas diffusion electrode (GDE) setups have very recently received increasing attention as a fast and straightforward tool for testing the oxygen reduction reaction (ORR) activity of surface area proton exchange membrane fuel cell (PEMFC) catalysts under more realistic reaction conditions. In the work presented here, we demonstrate that our recently introduced GDE setup is suitable for benchmarking the stability of PEMFC catalysts as well. Based on the obtained results, it is argued that the GDE setup offers inherent advantages for accelerated degradation tests (ADT) over classical three-electrode setups using liquid electrolytes. Instead of the solid–liquid electrolyte interface in classical electrochemical cells, in the GDE setup a realistic three-phase boundary of (humidified) reactant gas, proton exchange polymer (e.g. Nafion) and the electrocatalyst is formed. Therefore, the GDE setup not only allows accurate potential control but also independent control over the reactant atmosphere, humidity and temperature. In addition, the identical location transmission electron microscopy (IL-TEM) technique can easily be adopted into the setup, enabling a combination of benchmarking with mechanistic studies.


2.2. Gas diffusion electrode cell setup.

An in-house developed GDE cell setup, initially designed for measurements in hot phosphoric acid [24], was employed in all electrochemical measurements. The design used in the present study has been described before [31]. In short, it was optimized for low-temperature PEMFC conditions (<100 °C) by placing a Nafion membrane between the catalyst layer and the liquid electrolyte; no liquid electrolyte is in direct contact with the catalyst [31]. A photograph of the parts of the improved GDE setup is shown in figure 1.

An advantage of half-cells with a liquid electrolyte - compared to MEA test - is the possibility of performing IL-TEM measurements to analyze the degradation mechanism leading to the loss in active surface area.

Here, we demonstrate that the same is feasible in the GDE setup, and even elevated temperatures can be used; see figure 5.

By placing the TEM grid between the membrane electrolyte and GDL, the IL-TEM method can be applied straightforwardly.

For the demonstration, a catalyst with lower Pt loading (20 wt%) was used to facilitate the ability to follow the change in individual particles.

The typical degradation phenomena, such as migration and coalescence (yellow circles) and particle detachment (red circle), can be clearly seen to occur as a consequence of the load-cycle treatment.


Let me also look into Raman analysis of ionomers.

Chemical States of Water Molecules Distributed Inside a Proton Exchange Membrane of a Running Fuel Cell Studied by Operando Coherent Anti-Stokes Raman Scattering Spectroscopy

Hiromichi Nishiyama, Shogo Takamuku, Katsuhiko Oshikawa, Sebastian Lacher, Akihiro Iiyama and Junji Inukai, J. Phys. Chem. C 2020, 124, 9703−9711

ABSTRACT:

On the performance and stability of proton exchange membrane fuel cells (PEMFCs), the water distribution inside the membrane has a direct influence.

In this study, coherent anti-Stokes Raman scattering (CARS) spectroscopy was applied to investigate the different chemical states of water (protonated, hydrogen-bonded (H-bonded) and non-H-bonded water) inside the membrane with high spatial (10 μm φ (area) × 1 μm (depth)) and time (1.0 s) resolutions.

The number of water molecules in different states per sulfonic acid group in a Nafion membrane was calculated using the intensity ratio of deconvoluted O−H and C−F stretching bands in CARS spectra as a function of current density and at different locations.

The number of protonated water species was unchanged regardless of the relative humidity (RH) and current density, whereas H-bonded water molecules increased with RH and current density.

This monitoring system is expected to be used for analyzing the transient states during the PEMFC operation.


I had not come across coherent anti-Stokes Raman scattering (CARS) spectroscopy before.

The broad band from 3000 to 3500 cm-1, the O-H stretching vibration, is decomposed into five components. Let me look into this.

Peak 1 : 3059 cm-1 : Eigen cation H3O+

Peak 2 : 3289 cm-1 : H-bonded to SO3-

Peak 3 : 3371 cm-1 : Zundel cation H5O2+

Peak 4 : 3483 cm-1 : H-bonded to H2O

Peak 5 : 3559 cm-1 : non-H-bonded water

August 11 (Wed.)

There is a paper that examines hydrogen bonding in water.

Signatures of the hydrogen bonding in the infrared bands of water

J.-B. Brubach et al., The Journal of Chemical Physics 122, 184509 (2005)

Following the above considerations on the OH bond oscillator strength as a function of the number of established H bonds, the three-Gaussian components were assigned to three dominating populations of water molecules.

The lowest frequency Gaussian (ω=3295 cm−1) is assigned to molecules having H-bond coordination number close to four, as this component sits close to the OH band observed in ice.

The corresponding population is labeled “network water.”

Conversely, the highest frequency Gaussian (ω=3590 cm−1) is ascribed to water molecules being poorly connected to their environment since the frequency position of this component lies close to that of multimer molecules (for instance, ωdimer=3640 cm−1).

This population is called “multimer water.”

In between the two extreme Gaussians lies a third component (ω=3460 cm−1) which we associate with water molecules having an average degree of connection larger than that of dimers or trimers but lower than those participating to the percolating networks.

This type of molecules is referred to as “intermediate water.”

Obviously, this picture describes a situation averaged over time and any one molecule is expected to belong to the three types of population over several picoseconds.

The fact that the intermediate water Gaussian sits very close to the quasi-isobestic point frequency means, according to our view, that the quasi-isobestic point separates water molecules with respect to their involvement or noninvolvement in the long range connective structures, built up by almost fully bonded water molecules.

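As shown in the upper right of the inset in Fig. 3, separating the band into three peaks is said to explain the temperature dependence of the spectrum well. Below, each of these three components is listed next to the assignment with the closest wavenumber from the earlier five-peak decomposition. The three peaks seem to correspond very well.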

lowest frequency Gaussian (ω=3295 cm−1) : close to the OH band observed in ice

Peak 2 : 3289 cm-1 : H-bonded to SO3-

third component (ω=3460 cm−1) : intermediate water

Peak 4 : 3483 cm-1 : H-bonded to H2O

highest frequency Gaussian (ω=3590 cm−1) : poorly connected to their environment

Peak 5 : 3559 cm-1 : non-H-bonded water
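The pairing above can be checked numerically. This small sketch simply finds, for each of the three infrared components, the CARS peak with the nearest wavenumber and prints the offset; the wavenumbers are the ones quoted in these notes, while the dictionary names are only illustrative.

```python
# CARS peak positions (cm^-1) from the five-component decomposition quoted above.
cars_peaks = {
    "Peak 1: Eigen cation H3O+": 3059,
    "Peak 2: H-bonded to SO3-": 3289,
    "Peak 3: Zundel cation H5O2+": 3371,
    "Peak 4: H-bonded to H2O": 3483,
    "Peak 5: non-H-bonded water": 3559,
}

# Three Gaussian components (cm^-1) from the infrared study of liquid water.
ir_components = {
    "network water": 3295,
    "intermediate water": 3460,
    "multimer water": 3590,
}

matches = {}
for name, wavenumber in ir_components.items():
    # Nearest CARS peak by absolute wavenumber difference.
    nearest = min(cars_peaks, key=lambda k: abs(cars_peaks[k] - wavenumber))
    offset = cars_peaks[nearest] - wavenumber
    matches[name] = (nearest, offset)
    print(f"{name:18s} ({wavenumber} cm^-1) -> {nearest} ({offset:+d} cm^-1)")
```

The offsets come out at 6, 23, and 31 cm-1 in magnitude, small compared with the roughly 100 cm-1 spacing between neighbouring components. Note that closeness in wavenumber alone does not prove that the assignments describe the same species.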

I would like to read the following paper, but it is paywalled, so that will have to wait for another time!

Mechanism of Ionization, Hydration, and Intermolecular H-Bonding in Proton Conducting Nanostructured Ionomers

Simona Dalla Bernardina, Jean-Blaise Brubach, Quentin Berrod, Armel Guillermo, Patrick Judeinstein, Pascale Roy and Sandrine Lyonnard

Abstract

Water–ions interactions and spatial confinement largely determine the properties of hydrogen-bonded nanomaterials. Hydrated acidic polymers possess outstanding proton-conducting properties due to the interconnected H-bond network that forms inside hydrophilic channels upon water loading.

We report here the first far-infrared (FIR) coupled to mid-infrared (MIR) kinetics study of the hydration mechanism in benchmark perfluorinated sulfonic acid (PFSA) membranes, e.g., Nafion.

The hydration process was followed in situ, starting from a well-prepared dry state, within unprecedented continuous control of the relative humidity.

A step-by-step mechanism involving two hydration thresholds, at respectively λ = 1 and λ = 3 water molecules per ionic group, is assessed.

The molecular environment of water molecules, protonic species, and polar groups are thoroughly described along the various states of the polymer membrane, i.e., dry (λ ≈ 0), fully ionized (λ = 1), interacting (λ = 1–3), and H-bonded (λ > 3).

This unique extended set of IR data provides a comprehensive picture of the complex chemical transformations upon loading water into proton-conducting membranes, giving insights into the state of confined water in charged nanochannels and its role in driving key functional properties as ionic conduction.

Let's look at a paper on the evaluation of platinum catalysts!

New approach for rapidly determining Pt accessibility of Pt/C fuel cell catalysts

Ye Peng et al., J. Mater. Chem. A, 9, 13471 (2021)

A rapid method for evaluating accessibility of Pt within Pt/C catalysts for proton exchange membrane fuel cells (PEMFCs) is provided. This method relies on 3-electrode techniques which are available to most materials scientists, and will accelerate development of next generation PEMFC catalysts with optimal distribution of Pt within the carbon support.

Proton exchange membrane fuel cells (PEMFCs) are rapidly gaining entry into many commercial markets ranging from stationary power to heavy duty/light duty transportation.

However, as the technology continues to advance, operating current densities are pushed ever higher while platinum group metal (PGM) loadings are pushed ever lower.

To cut cost and improve performance, the catalyst loading has to be reduced and the current density increased.

As this occurs, new challenges are being discovered which require materials-level advances to overcome.

In particular, as PGM loadings are reduced to a level ≤0.125 mg cm-2, significant performance losses have been widely reported.

These losses are most clearly observed at current densities of >1.5 A cm-2 , and have been correlated very strongly with a decrease in ‘roughness factor’ (‘r.f.’, a measure of cm2 Pt per cm2 membrane electrode assembly (MEA)) at the cathode, leading several researchers to attribute this to an oxygen transport phenomenon occurring at each individual Pt site.

I do not understand what 'roughness factor' means either.
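Going by the definition quoted above (cm2 of Pt per cm2 of MEA), the roughness factor can be estimated as the product of the Pt loading and the mass-specific Pt surface area. The sketch below only does the unit conversion: 1 mg cm-2 times 1 m2 g-1 equals 10 cm2 of Pt per cm2 of electrode. The function name and the 60 m2/g surface area are hypothetical illustration values, not figures from the paper.

```python
def roughness_factor(loading_mg_cm2, ecsa_m2_g):
    """Roughness factor in cm2_Pt per cm2_MEA.

    loading_mg_cm2: Pt loading in mg_Pt per cm2 of electrode
    ecsa_m2_g:      Pt surface area per unit Pt mass in m2 per g
    (1 mg/cm2) * (1 m2/g) = 1e-3 g/cm2 * 1e4 cm2/g = 10 cm2_Pt/cm2_MEA
    """
    return loading_mg_cm2 * ecsa_m2_g * 10.0

# Hypothetical catalyst with 60 m2/g at the 0.125 mg/cm2 loading mentioned in the text.
rf_low = roughness_factor(0.125, 60.0)
# Same hypothetical catalyst at a higher loading of 0.4 mg/cm2 for comparison.
rf_high = roughness_factor(0.4, 60.0)
print(rf_low, rf_high)
```

With fewer cm2 of Pt under each cm2 of electrode, every Pt site has to carry a larger share of the current, which is consistent with the quoted attribution of the losses to oxygen transport at individual Pt sites.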

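These data compare Vulcan carbon, which has a small surface area, with Ketjen black, which has a large one; in both cases the surface area drops when platinum is added. This is presumed to be because the platinum nanoparticles block the nanoscale pores of the carbon.

Vulcan carbon and Ketjen black perform differently. The left panel shows that the performance ranking reverses depending on current density. The right panel shows that the humidity dependence is small for Vulcan carbon but large for Ketjen black; this difference is presumed to arise because both platinum and ionomer penetrate into the pores of the carbon material. Pt/VC and Pt/KB can be compared in MEA-level experiments, but fabricating and testing an MEA is not easy in an ordinary laboratory. MEA: membrane electrode assembly (gas (H2) diffusion layer / anode catalyst layer / PEM (polymer electrolyte membrane) / cathode catalyst layer / gas (O2) diffusion layer).

3D-TEM makes it possible to tell whether the platinum particles sit on the outside of the carbon particles or have penetrated inside them.

This figure shows the main result of the paper. When hydrogen underpotential deposition (HUPD) is plotted against sweep rate, the slope of the line serves as an index of "Pt accessibility", and Pt/VC, which has the smaller slope, can be judged to be more Pt-accessible than Pt/KB. The contribution of the paper appears to be that Pt/C can be evaluated at lower cost and in less time than by running time-consuming, expensive 3D-TEM or by preparing gram quantities of platinum catalyst and testing an assembled cell (MEA).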

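August 12 (Thu.)

The performance of a catalyst layer and its degradation process are inferred from degradation test data on the catalyst (layer) and the analysis of those data, but since I have no experience with electrochemical testing, I lose track of the discussion partway through. So today I will study the basics of electrochemical measurement.

Study material: 分極曲線・サイクリックボルタンメトリ-(2)燃料電池(PEFC) (Polarization Curves and Cyclic Voltammetry (2): Fuel Cells (PEFC))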

五百蔵 勉,安田 和明, Electrochemistry, 77,No. 3, 263-268(2009)

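1 Introduction

In research on polymer electrolyte fuel cells (PEFCs), polarization measurements and cyclic voltammetry (CV) are routinely used analytical techniques.

In PEFC research, however, they are seldom used to determine diffusion coefficients or exchange current densities, as covered in the previous general overview; more often they are used for more practical purposes.

For example, the activation-controlled oxygen reduction current at the cathode is obtained from a polarization measurement and divided by the active surface area obtained from CV to give the specific activity (current per unit catalyst surface area), and the activities of various catalyst materials are then compared on that basis.

In recent years, analysis of degradation factors aimed at improving PEFC durability has also been actively pursued, and polarization and CV techniques are essential for accelerating catalyst degradation and for evaluating it quantitatively.

This article introduces practical analysis methods for polarization and CV measurements on single cells built from a power-generating membrane electrode assembly (MEA) and on half-cells that use an aqueous electrolyte, such as rotating electrodes, taking up several concrete examples of data analysis.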

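2 Polarization measurements

2.1 Polarization measurements using an MEA (single cell)

To carry out a polarization measurement on an MEA, a single cell must be assembled and set up so that it can generate power. Fig. 1 shows a schematic of the typical structure of an MEA.

(A schematic I see often, but I had never paid attention to the scale. It is only 1 mm thick.)

A polarization curve is sometimes measured by sweeping the cell voltage at a very slow scan rate, but the steady-state method is generally used: the cell is held at a given current density for a fixed time, the resulting cell voltage is recorded, and this is repeated step by step from low to high current density. This is because changing the current density changes the distributions of gas, water, current, and so on inside the MEA, and it takes about 5 to 10 minutes for these to settle to a steady state.

Fig. 2 shows a conceptual steady-state polarization curve (current-voltage curve) of a PEFC single cell. The cell voltage $E$ (V) at a load current $i$ (A) can be expressed as follows.

$$E = E_0 - \eta_a - \eta_c - \eta_{\mathrm{diff}} - i \cdot R \qquad (1)$$

Here $E_0$ is the theoretical electromotive force, $\eta_a$ the anode activation polarization, $\eta_c$ the cathode activation polarization, $\eta_{\mathrm{diff}}$ the concentration polarization due to mass transport, and $i \cdot R$ the resistance polarization (the product of the current and the internal resistance of the cell).

If the fuel is pure hydrogen and the anode is a platinum catalyst, the anode activation polarization is very small, so the activation polarization can be regarded as arising almost entirely from the cathode.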
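To see how the terms of Eq. (1) shape a polarization curve, here is a small numerical sketch. Only the sum itself comes from the article; the Tafel form for the cathode term, the limiting-current form for the concentration term, the per-area units, and every parameter value are assumptions chosen purely for illustration.

```python
import numpy as np

def cell_voltage(i, e0=1.23, eta_a=0.0, tafel_slope=0.07, i0=1e-3,
                 conc_coeff=0.05, i_lim=2.0, r=0.1):
    """Cell voltage from Eq. (1): E = E0 - eta_a - eta_c - eta_diff - i*R.

    i is a current density in A/cm2 and r an area-specific resistance in ohm*cm2
    (assumed units). eta_a is set to zero, following the remark that the anode
    activation polarization is negligible for pure H2 on Pt.
    """
    i = np.asarray(i, dtype=float)
    eta_c = tafel_slope * np.log10(i / i0)               # assumed Tafel behaviour
    eta_diff = -conc_coeff * np.log10(1.0 - i / i_lim)   # assumed limiting-current form
    return e0 - eta_a - eta_c - eta_diff - i * r

currents = np.array([0.05, 0.2, 0.5, 1.0, 1.5, 1.9])    # A/cm2, hypothetical
voltages = cell_voltage(currents)
for cur, volt in zip(currents, voltages):
    print(f"{cur:4.2f} A/cm2 -> {volt:.3f} V")
```

With these placeholder values the cathode term dominates at low current, the ohmic term grows linearly, and the concentration term only bends the curve down near the limiting current, which is the qualitative shape usually drawn for Fig. 2-type diagrams.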

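I looked briefly into why this cathode activation overpotential is large.

Shohji Tsushima et al. write the following in a review article titled 燃料電池の原理と特徴 (Principles and Characteristics of Fuel Cells), 高温学会誌, Vol. 35, No. 5 (September 2009).

In a PEMFC, electron transfer occurs more readily in the anode reaction than in the cathode reaction, and the activation overpotential at the anode is in most cases negligibly small. At the cathode, on the other hand, electrons in the platinum, supplied from the anode, must pass through elementary steps and ultimately move into the product water molecule. The elementary steps of this electrochemical reaction can hardly be said to be fully understood. For example, the early stage of the reaction may involve adsorption of an oxygen molecule on platinum, formation of adsorbed OH by bonding of an oxygen atom with a proton, formation of OOH in the same manner, and then desorption as a water molecule following electron transfer from the platinum side. Even though the elementary steps are not fully clear, an activation overpotential is needed to drive electron transfer for the cathode reaction to proceed; in a PEMFC in particular it is known to be larger than at the anode and to be the main cause of energy loss.

Back to: Polarization Curves and Cyclic Voltammetry (2): Fuel Cells (PEFC)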

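2.2 Polarization measurements using a half-cell

Polarization measurement on an MEA is a realistic method, but the preparation and procedure are laborious and the result is affected by many factors such as MEA fabrication and operating conditions. Even when the intent is to evaluate a catalyst material, for instance, there are cases where the result does not simply reflect the properties of the catalyst itself. Evaluation with a half-cell such as a rotating disk electrode (RDE), by contrast, is relatively simple, gives good reproducibility, and is widely used for assessing catalytic activity. Fig. 3 shows the apparatus for half-cell measurement with an RDE. For measurements at high temperature or under pressure, which are difficult with an RDE, methods using a channel flow electrode are also used 4).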

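Fig. 4(a) shows hydrodynamic voltammograms of the oxygen reduction reaction on carbon-supported platinum (Pt/C catalyst) fixed on a glassy carbon electrode. As the electrode potential is lowered, the oxygen reduction current $i$ begins to flow at E < 1 V and becomes constant once it reaches the diffusion-limited current $i_L$, which depends on the electrode rotation rate. Until the diffusion-limited current is reached, the current is under mixed control by convective diffusion and reaction activation, and is expressed by the following relation.

$$\frac{1}{i} = \frac{1}{i_k} + \frac{1}{i_L} \qquad (2)$$

Here $i_k$ is the activation-controlled (kinetic) current with the influence of diffusion removed. Rearranging Eq. (2) gives $i_k$ as follows.

$$i_k = \frac{i \cdot i_L}{i_L - i} \qquad (3)$$

Using the kinetic current $i_k$ obtained from $i$ and $i_L$ in this way, the specific activity $i_s$ and the mass activity $i_m$, which are used as indices of electrode activity, are obtained as follows.

$$i_s\ (\mathrm{mA/cm^2_{Pt}}) = \frac{i_k\ (\mathrm{mA})}{\text{Pt active surface area}\ (\mathrm{cm^2_{Pt}})} \qquad (4)$$

$$i_m\ (\mathrm{mA/mg_{Pt}}) = \frac{i_k\ (\mathrm{mA})}{\text{Pt loading}\ (\mathrm{mg_{Pt}})} \qquad (5)$$

Here the active surface area of the catalyst (electrochemically active surface area; ECSA) can be determined using CV, described later.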
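Equations (2) to (5) amount to three one-line calculations. The sketch below works through them with made-up numbers (a measured current of 2 mA, a limiting current of 6 mA, 1.5 cm2 of active Pt, and 0.01 mg of Pt); the function names and all input values are illustrative assumptions, and currents are treated as magnitudes.

```python
def kinetic_current(i, i_lim):
    """Eq. (3): remove the mass-transport contribution from the measured current."""
    return i * i_lim / (i_lim - i)

def specific_activity(i_k_mA, ecsa_cm2):
    """Eq. (4): kinetic current per unit active Pt surface area (mA/cm2_Pt)."""
    return i_k_mA / ecsa_cm2

def mass_activity(i_k_mA, pt_mass_mg):
    """Eq. (5): kinetic current per unit Pt mass (mA/mg_Pt)."""
    return i_k_mA / pt_mass_mg

i_meas, i_lim = 2.0, 6.0                 # mA, hypothetical magnitudes
i_k = kinetic_current(i_meas, i_lim)     # 2*6/(6-2) = 3 mA
i_s = specific_activity(i_k, 1.5)        # 3/1.5 = 2 mA/cm2_Pt
i_m = mass_activity(i_k, 0.01)           # 3/0.01 = 300 mA/mg_Pt

# Consistency check against Eq. (2): 1/i = 1/i_k + 1/i_L
print(1.0 / i_meas, 1.0 / i_k + 1.0 / i_lim)
print(i_k, i_s, i_m)
```

The denominator `i_lim - i` is also where this approach becomes fragile: as the measured current approaches the limiting current, small errors in either value are strongly amplified in the kinetic current.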

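Fig. 4(b) shows the potential dependence of $i_s$ (Tafel plot) obtained from the hydrodynamic voltammograms of Fig. 4(a) using Eqs. (3) and (4). The method based on Eq. (3) is simple, but because it takes the difference between $i_L$ and $i$, the influence of experimental error and noise grows as $i$ approaches $i_L$. In addition, it cannot be applied when the electrode activity is so low that the diffusion-controlled region is not observed ($i_L$ cannot be determined); in that case several hydrodynamic voltammograms at different rotation rates must be measured and analyzed with a Koutecky-Levich plot (Eq. (6)).

$$\frac{1}{i} = \frac{1}{i_k} + \frac{1}{0.62\, n F A C D^{2/3} \nu^{-1/6} \omega^{1/2}} \qquad (6)$$

Here $n$ is the number of electrons transferred, $F$ the Faraday constant (96485 C mol$^{-1}$), $A$ the geometric area of the electrode (cm$^2$), $C$ the concentration of the reacting species (mol cm$^{-3}$), $D$ the diffusion coefficient of the reacting species (cm$^2$ s$^{-1}$), $\nu$ the kinematic viscosity of the solution (cm$^2$ s$^{-1}$), and $\omega$ the angular rotation rate of the electrode (rad s$^{-1}$). In a Koutecky-Levich plot, $1/i$ is plotted against $\omega^{-1/2}$ and the resulting straight line is extrapolated to $\omega^{-1/2} = 0$ to obtain $1/i_k$, so the analysis is possible even when $i_L$ is not well defined. See textbooks for details of the analysis 1,5,6).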
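The Koutecky-Levich extrapolation is a straight-line fit, so it can be sketched in a few lines. Here synthetic currents are generated from Eq. (6) with a known kinetic current and then recovered from the intercept. The electrode area, O2 concentration, diffusion coefficient, and viscosity are assumed order-of-magnitude values for an aqueous acid electrolyte, not values taken from the article.

```python
import numpy as np

F = 96485.0  # Faraday constant, C/mol

def levich_constant(n, area, conc, diff, visc):
    """B in i_L = B * omega**0.5, from the second term of Eq. (6)."""
    return 0.62 * n * F * area * conc * diff ** (2.0 / 3.0) * visc ** (-1.0 / 6.0)

# Assumed parameters: 4-electron O2 reduction on a 0.196 cm2 disk.
B = levich_constant(n=4, area=0.196, conc=1.2e-6, diff=1.9e-5, visc=1.0e-2)

rpm = np.array([400.0, 900.0, 1600.0, 2500.0])
omega = 2.0 * np.pi * rpm / 60.0                 # rad/s

# Synthetic "measured" currents at one fixed potential, from Eq. (6).
i_k_true = 5.0e-3                                # A, hypothetical kinetic current
i_meas = 1.0 / (1.0 / i_k_true + 1.0 / (B * np.sqrt(omega)))

# Koutecky-Levich plot: 1/i against omega**-0.5 is a straight line.
slope, intercept = np.polyfit(omega ** -0.5, 1.0 / i_meas, 1)
i_k_fit = 1.0 / intercept                        # extrapolation to omega**-0.5 = 0
B_fit = 1.0 / slope                              # gives n if A, C, D, nu are known

print(f"i_k recovered: {i_k_fit * 1e3:.3f} mA (true {i_k_true * 1e3:.3f} mA)")
print(f"Levich constant recovered: {B_fit:.3e} (true {B:.3e})")
```

With real data the points scatter around the line, and the slope is often used as a cross-check on the assumed number of electrons.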

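3 Cyclic voltammetry (CV)

One of the main uses of CV in PEFCs is measuring the active surface area (ECSA). Since the size of the catalyst's active surface area governs electrode activity, it is an important index in both initial-activity and degradation analysis. Moreover, in a PEFC electrode not all of the loaded catalyst can normally be used, and even with the same catalyst material the fraction of platinum that is actually usable in the catalyst electrode (that is, the platinum utilization) varies with how the catalyst layer is formed and with the operating conditions. CV allows simple in situ measurement of the catalyst's active surface area, not only in half-cells but also in measurements on MEAs. For platinum catalyst electrodes, the active surface area is most often evaluated from the charge of the hydrogen adsorption/desorption waves. This is because no special gas or equipment is needed for the surface area measurement, hydrogen adsorption on the platinum surface proceeds by an underpotential deposition (UPD) mechanism so that multilayer deposition is unlikely, and a clean electrode gives well-defined peaks.

3.1 CV measurements using a half-cell

As with polarization measurements, the catalyst is fixed on a glassy carbon electrode for the measurement. Fig. 5 shows a typical CV of a platinum electrode in 0.1 M aqueous perchloric acid (oxidation current is plotted as positive).

Hydrogen adsorption/desorption peaks appear in the potential region less noble than 0.4 V. The hydrogen adsorption charge $Q_H$ is taken as the hatched region in Fig. 5, bounded by the electric double-layer charging current (horizontal line) and the onset of the hydrogen evolution peak (vertical line).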
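As a rough sketch of how $Q_H$ turns into a surface area: the current above the double-layer baseline is integrated over potential, divided by the scan rate to get a charge, and then divided by a reference charge density. The 210 µC/cm2 used here is the value conventionally quoted for a hydrogen monolayer on polycrystalline Pt; it is not stated in the excerpt above, so treat it as an assumption, along with the function names and the flat toy voltammogram.

```python
import numpy as np

Q_REF_UC_PER_CM2 = 210.0  # conventional H-monolayer charge on polycrystalline Pt (assumption)

def hupd_charge_uC(potential_V, current_A, i_dl_A, scan_rate_V_s):
    """Charge under the H adsorption wave, above the double-layer current, in microcoulombs."""
    excess = np.abs(np.asarray(current_A) - i_dl_A)
    # Trapezoidal integration of the excess current over potential (A*V).
    area = np.sum(0.5 * (excess[1:] + excess[:-1]) * np.abs(np.diff(potential_V)))
    return area / scan_rate_V_s * 1e6            # (A*V)/(V/s) = C, then to uC

def ecsa_cm2(q_h_uC):
    return q_h_uC / Q_REF_UC_PER_CM2

# Toy data: a flat 10 uA excess current over a 0.30 V window at 50 mV/s (hypothetical).
potential = np.linspace(0.05, 0.35, 31)
current = np.full_like(potential, 2.0e-5)        # A, total current
q_h = hupd_charge_uC(potential, current, i_dl_A=1.0e-5, scan_rate_V_s=0.05)
area_pt = ecsa_cm2(q_h)

pt_mass_mg = 0.005                               # hypothetical Pt mass on the electrode
ecsa_m2_per_g = area_pt / pt_mass_mg * 0.1       # 1 cm2/mg = 0.1 m2/g
print(q_h, area_pt, ecsa_m2_per_g)
```

A real voltammogram would need the integration limits chosen as described in the text: the double-layer current as the baseline and the onset of hydrogen evolution as the lower potential bound.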

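3.2 CV measurements using an MEA (single cell)

To obtain the active surface area that can contribute to power generation in a single cell, a single cell is assembled from an MEA and CV is measured. The precautions are the same as for the half-cell. The cell is held near room temperature (or at the operating temperature), and humidified nitrogen is passed over the working and counter electrodes. After the air in both electrodes of the test cell has been completely displaced, humidified hydrogen is passed over the counter electrode side to establish a hydrogen atmosphere.

In CV on an MEA using gas diffusion electrodes, hydrogen evolution and the oxidation current of the evolved hydrogen often begin to flow at a considerably higher potential (~0.1 V) than in a half-cell and overlap the hydrogen adsorption/desorption waves, which easily causes errors in calculating $Q_H$. This potential shift of hydrogen evolution is reported to arise because the hydrogen partial pressure near the working-electrode catalyst decreases 10); to prevent it, reducing or stopping the flow of purge nitrogen at the test electrode during CV is said to be effective 7,10).

Fig. 6 shows the CV of a Pt/C catalyst electrode. Apart from the whole pattern being shifted toward the oxidation-current side, essentially the same CV as in aqueous electrolyte is obtained. This shift is the effect of hydrogen gas crossover. With thin fluorinated electrolyte membranes in particular, the amount of crossover hydrogen is large (equivalent to a current of ~1 mA/cm2), so the crossover-hydrogen oxidation current superimposed on the CV current is also large. The platinum utilization $u_{Pt}$ can be determined from the ratio of the effective active surface area of the electrode obtained on the MEA in this way ($SA_{MEA}$) to the intrinsic active surface area of the catalyst material obtained by half-cell measurement or similar ($SA_{cat}$).

$$u_{Pt} = \frac{SA_{MEA}}{SA_{cat}} \qquad (8)$$

A method using carbon monoxide (CO) stripping/CO adsorption, described later, has also been proposed for obtaining $u_{Pt}$ 11).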

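3.3 CV application example 1: accelerated catalyst degradation and analysis

Improving PEFC durability is one of the key challenges for commercialization 12), and suppressing catalyst degradation, one of the degradation factors, is required. Under normal PEFC operating conditions catalyst degradation often proceeds slowly, so establishing appropriate accelerated degradation methods and ways to evaluate them is important for speeding up materials and system development. For this purpose, accelerated catalyst degradation protocols using potential cycling such as CV, for both half-cells and MEAs, have been proposed by the Fuel Cell Commercialization Conference of Japan (FCCJ) (though the protocols may be revised in the future on the basis of subsequent findings) 13).

The main causes of degradation of Pt/C catalysts (especially at the cathode) are considered to be dissolution and agglomeration of Pt fine particles and degradation of the catalyst support. Pt dissolution is known to be accelerated by repeated oxidation and reduction of Pt 14), and such an environment corresponds well to the cathode-side conditions during load changes that alternate between OCV and loaded states. Fig. 7(a) shows the load-cycling test conditions for MEAs proposed by FCCJ. In this test the potential is cycled between 0.6 V and 0.9 V under a nitrogen atmosphere to repeat Pt oxidation and reduction, and catalyst stability is evaluated under conditions that accelerate Pt dissolution.

Degradation of catalyst supports such as carbon black, on the other hand, is known to be accelerated at high potentials above 1 V 15). Under normal conditions fuel cell electrodes are not exposed to such high potentials, but during start-up and shutdown, for example, the cathode potential can reach as much as 1.5 V through what is called the reverse-current mechanism 16). The test conditions shown in Fig. 7(b) have been proposed as a start-stop test simulating this state. Repeating a square-wave cycle between 0.9 V and 1.3 V under a nitrogen atmosphere evaluates tolerance to the abnormal potentials of start-up and shutdown.

Fig. 8 shows an example in which the start-stop test of Fig. 7(b) was applied for 2000 cycles to the Pt/C cathode catalyst electrode of an MEA. As cycling proceeds, the hydrogen adsorption/desorption waves and the oxide-layer formation/reduction peaks shrink, indicating that Pt dissolution and agglomeration are progressing. At the same time the electric double-layer current gradually increases and a peak pair near 0.5 - 0.6 V, attributed to redox of functional groups on the carbon surface, becomes progressively clearer, suggesting that oxidation of the carbon support surface is also progressing. Fig. 8(b) plots the ECSA obtained from the hydrogen adsorption/desorption waves of the CV, normalized to its initial value, against cycle number. Because the example of Fig. 8 is a CV measurement at 80 ℃, the decrease in hydrogen coverage and the influence of hydrogen evolution cannot be neglected, and accurate ECSA evaluation is difficult. It is nevertheless possible to discuss relative changes with cycling, as in Fig. 8(b). For accelerated degradation tests using half-cells, repeated triangular-wave CV has been proposed for both the load-cycling and start-stop tests 13). Fig. 9 shows an example in which a half-cell start-stop test (triangular-wave CV, 1.0 V/1.4 V) was run for 10000 cycles on a Pt/C catalyst. As with the MEA result of Fig. 8, a decrease in ECSA accompanied by an increase in double-layer capacitance is confirmed.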

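August 13 (Fri.)

MD シミュレーションを用いたアイオノマー薄膜の構造およびプロトン輸送の解析 (Analysis of the Structure and Proton Transport of Ionomer Thin Films Using MD Simulation)

小林 光一 et al., 燃料電池 Vol. 18, No. 4 (2019)

Abstract: Polymer electrolyte fuel cells (PEFCs) have been actively researched and developed as automotive and stationary power sources. During power generation various species such as protons, hydrogen, and oxygen are transported inside the PEFC, so improving PEFC performance requires an understanding of the internal transport phenomena. This study analyzed the effect of ionomer film thickness on film structure and proton transport properties in ionomer thin films in the catalyst layer. Molecular dynamics simulation was used to evaluate structure and transport at the nanoscale. The results show that the ionomer film thickness affects the distribution of water molecules inside the film, and that at a film thickness of about 7 nm the connectivity of the water clusters is highest and the proton self-diffusion coefficient is also high.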

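1. Introduction

Polymer electrolyte fuel cells (PEFCs) are being actively developed, with high expectations for their performance particularly as automotive and household power sources, as Japan steers toward a hydrogen society. Widespread adoption of PEFCs requires a higher power density per unit cell 1). This in turn requires clarifying the correlation between structure and transport at the molecular level in the membrane electrode assembly (MEA); in the catalyst layer in particular, structural optimization that takes into account gas diffusivity and proton and electron conductivity is needed 2).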

・・・・・・・・・・・・・・・・・・・・

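2. Computational method

In this study a computational system with an ionomer thin film adsorbed on a carbon wall was built, and the effect of the film thickness on film structure and proton transport properties was analyzed by molecular dynamics simulation. The composition of the system is described below. A model of Nafion® was used for the ionomer thin film. The equivalent weight (EW) of the Nafion® used is 1146, and its molecular structure is shown in Fig. 1. To reduce the computational load while maintaining accuracy, a United Atom (UA) model 14), which treats each CFn group as a single atom, was used. The film thickness was controlled by changing the number of Nafion® chains in the system. The thickness varies by about ±1 nm depending on factors such as the water content of the film; the number of Nafion® chains in the system and the approximate film thickness are summarized in Table 1.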

・・・・・・・・・・・・・・・・・・・・

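3. Results and discussion

3.1 Maximum cluster length

First, cluster size was analyzed to examine the correlation between the ionomer film thickness and the water cluster structure in the film. In this calculation a water cluster is defined as a set of water and hydronium-ion oxygen atoms whose nearest-neighbor interatomic distance is within 3.3 Å. This distance of 3.3 Å is the end of the first peak in the RDF between water oxygen atoms in a bulk Nafion® membrane 20). The number of water molecules in a cluster is defined as the cluster size. Fig. 4 shows the average cluster size as the film thickness is varied at λ = 3 and 14. At λ = 14 the cluster size increases with film thickness, whereas at λ = 3 it changes little. In general the proton self-diffusion coefficient increases as clusters in the membrane grow, but in a thin film, growth of clusters in the wall-normal direction is thought to have little effect on the self-diffusion coefficient in the horizontal direction.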
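The cluster definition above is a connected-components problem: two oxygen atoms belong to the same cluster if they are linked by a chain of neighbours no more than 3.3 Å apart. A minimal sketch with a union-find structure is shown below. It ignores periodic boundary conditions and uses a handful of made-up coordinates, so it illustrates the definition rather than reproducing the paper's analysis; the function name is hypothetical.

```python
import numpy as np
from collections import Counter

def water_cluster_sizes(oxygen_xyz, cutoff=3.3):
    """Sizes of clusters of O atoms linked by distances <= cutoff (angstrom), largest first."""
    xyz = np.asarray(oxygen_xyz, dtype=float)
    n = len(xyz)
    parent = list(range(n))

    def find(a):
        # Find the cluster representative, compressing the path as we go.
        while parent[a] != a:
            parent[a] = parent[parent[a]]
            a = parent[a]
        return a

    # All pairwise distances (fine for small systems; no periodic images considered).
    dist = np.linalg.norm(xyz[:, None, :] - xyz[None, :, :], axis=-1)
    for a, b in np.argwhere(np.triu(dist <= cutoff, k=1)):
        ra, rb = find(int(a)), find(int(b))
        if ra != rb:
            parent[rb] = ra                      # merge the two clusters

    counts = Counter(find(a) for a in range(n))
    return sorted(counts.values(), reverse=True)

# Made-up coordinates: a chain of three, a pair, and an isolated molecule.
oxygens = [
    (0.0, 0.0, 0.0), (3.0, 0.0, 0.0), (6.0, 0.0, 0.0),
    (20.0, 0.0, 0.0), (20.0, 3.2, 0.0),
    (50.0, 50.0, 50.0),
]
sizes = water_cluster_sizes(oxygens)
print(sizes)
```

The first and third atoms of the chain are 6 Å apart, yet they end up in the same cluster through the middle atom, which is exactly what makes the cluster size a measure of connectivity rather than of local density.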

・・・・・・・・・・・・・・・・・・・・

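3.2 Density distribution

In the system treated here, an ionomer thin film on a carbon wall, the density in the wall-normal direction is expected to be non-uniform owing to the influence of the carbon wall and the interface. To obtain the density distribution of such a system, the system was divided into small cells of x×y×z = 1.02 × 0.88 × 1.00 Å³ and the density ρlocal was obtained for each cell. The density distribution in the wall-normal direction was then obtained by averaging ρlocal over the wall-parallel directions.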
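The two-step procedure (local density per cell, then averaging parallel to the wall) maps directly onto a 3D histogram. The sketch below uses random positions in a small box as a stand-in for a simulation frame; the box size, cell counts, and masses are arbitrary assumptions, and the function name is hypothetical.

```python
import numpy as np

def wall_normal_density(positions, masses, box, n_cells):
    """Density profile along z, averaged over x and y.

    positions: (N, 3) coordinates; masses: (N,); box: (Lx, Ly, Lz); n_cells: (nx, ny, nz).
    Returns the z-centres of the slabs and the density in mass per unit volume.
    """
    ranges = [(0.0, box[0]), (0.0, box[1]), (0.0, box[2])]
    mass_per_cell, edges = np.histogramdd(positions, bins=n_cells, range=ranges,
                                          weights=masses)
    cell_volume = np.prod([box[k] / n_cells[k] for k in range(3)])
    rho_local = mass_per_cell / cell_volume      # density of every small cell
    rho_z = rho_local.mean(axis=(0, 1))          # average over the wall-parallel directions
    z_centres = 0.5 * (edges[2][1:] + edges[2][:-1])
    return z_centres, rho_z

rng = np.random.default_rng(0)
box = (20.0, 20.0, 40.0)                         # angstrom, hypothetical
n_cells = (20, 20, 40)
positions = rng.uniform(0.0, 1.0, size=(5000, 3)) * np.array(box)
masses = np.full(5000, 18.0)                     # amu, as if every particle were water

z, rho = wall_normal_density(positions, masses, box, n_cells)
slab_volume = box[0] * box[1] * (box[2] / n_cells[2])
print(rho[:5], rho.sum() * slab_volume)          # second value recovers the total mass
```

In practice the profile would be accumulated over many frames, since a single frame with cells of about 1 Å³ contains mostly empty cells.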

・・・・・・・・・・・・・・・・・・・・

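3.3 Proton self-diffusion coefficient

Finally, the proton self-diffusion coefficient was calculated to analyze the relation between proton transport properties and ionomer film thickness. The diffusion coefficient was calculated from the mean square displacement (MSD) using the Einstein relation. The MSD is given by Eqs. (2) and (3), and the Einstein relation by Eq. (4). As shown in Fig. 2, the self-diffusion coefficient was restricted to the direction parallel to the carbon wall. This is because, for proton transport from the electrolyte membrane to the catalyst, transport parallel to the carbon wall accounts for most of the path.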
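Equations (2) to (4) are not reproduced in these notes, so the sketch below assumes the standard form of the Einstein relation, MSD(t) = 2 d D t with d = 2 for motion restricted to the plane parallel to the wall. It is tested on a synthetic random walk whose diffusion coefficient is known, not on simulation data, and it uses a single time origin for brevity.

```python
import numpy as np

def in_plane_diffusion(traj, dt):
    """Self-diffusion coefficient from the x,y mean square displacement.

    traj: (n_frames, n_particles, 3) unwrapped coordinates; dt: time between frames.
    Assumes the Einstein relation MSD = 4*D*t for two dimensions.
    """
    disp = traj[:, :, :2] - traj[0, :, :2]               # in-plane displacement from frame 0
    msd = np.mean(np.sum(disp ** 2, axis=2), axis=1)     # average over particles
    t = np.arange(len(traj)) * dt
    slope = np.polyfit(t, msd, 1)[0]                     # linear fit of MSD against time
    return slope / 4.0, t, msd

# Synthetic random walk: Gaussian steps with standard deviation sigma per axis per frame.
rng = np.random.default_rng(0)
sigma, dt = 0.1, 1.0
steps = rng.normal(scale=sigma, size=(200, 2000, 3))
traj = np.cumsum(steps, axis=0)

D_est, t, msd = in_plane_diffusion(traj, dt)
D_true = sigma ** 2 / (2.0 * dt)                         # per-axis variance grows as 2*D*t
print(f"estimated D = {D_est:.5f}, expected D = {D_true:.5f}")
```

Production analyses usually average over many time origins and fit only the linear regime of the MSD; both refinements are left out here to keep the relation itself visible.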

・・・・・・・・・・・・・・・・・・・・

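4. Conclusions

This study used MD simulation to analyze proton transport properties in PEFC ionomer thin films. The aTS-EVB model was used as the proton diffusion model, so that diffusion by the Grotthuss mechanism was taken into account. As the model of the ionomer thin film, a coarse-grained Nafion® model was adsorbed on an LJ wall mimicking a carbon wall with a contact angle of 90°, and changes in proton transport properties and film structure were analyzed as the film thickness was varied. First, at λ = 14 (corresponding to the water content of a bulk Nafion membrane at RH = 100%), the proton self-diffusion coefficient and the cluster connectivity were found to depend little on film thickness. The cluster length was also nearly equal to the size of the computational domain, suggesting that the clusters are fully connected at high water content. Furthermore, the clusters grow in the wall-normal direction as the film thickness increases, which is considered one reason why the proton self-diffusion coefficient depends little on film thickness at λ = 14.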

・・・・・・・・・・・・・・・・・・・・

Here is a paper that looks interesting. Let me take a quick look. It seems some remarkable results have been obtained!

High Pressure Nitrogen-Infused Ultrastable Fuel Cell Catalyst for Oxygen Reduction Reaction, Eunjik Lee et al., ACS Catal., 11, 5525−5531 (2021)

ABSTRACT:

The mass activity of a Pt-based catalyst can be sustained throughout the fuel cell vehicle life by optimizing its stability under the conditions of an oxygen reduction reaction (ORR) that drives the cells. Here, we demonstrate improvement in the stability of a readily available PtCo core−shell nanoparticle catalyst over 1 million cycles by maintaining its electrochemical surface area by regulating the amount of nitrogen doped into the nanoparticles. The high pressure nitrogen-infused PtCo/C catalyst exhibited a 2-fold increase in mass activity and a 5-fold increase in durability compared with commercial Pt/C, exhibiting a retention of 80% of the initial mass activity after 180 000 cycles and maintaining the core−shell structure even after 1 000 000 cycles of accelerated stress tests. Synchrotron studies coupled with pair distribution function analysis reveal that inducing a higher amount of nitrogen in core−shell nanoparticles increases the catalyst durability.


INTRODUCTION

Extensive practical applications of the commercial hydrogen fuel cell vehicle have been delayed because of the high cost and limited durability of the membrane electrode assembly (MEA).

One of the main reasons for the high cost of the MEA is the large amount of Pt used to catalyze the oxygen reduction reaction (ORR) at the cathode of the proton exchange membrane (PEM) fuel cell.

In the past decade, several studies investigated ORR electrocatalysts to reduce the cost of the MEA.

One of the main strategies is to add modifiers to the Pt catalyst by changing the structure and morphology of the PtM (metal) alloy catalyst, while others include completely avoiding Pt usage by using various nonprecious M−N−C moiety catalysts.

Although the addition of modifiers can drastically increase catalytic performance, it cannot be sustained for prolonged periods, which is a major factor impeding commercialization.

To date, carbon-supported PtCo alloy nanoparticles have emerged as the best alternative to Pt/C; original equipment manufacturers are already using them in first-generation hydrogen fuel cell vehicles.

For better Pt utilization efficiency throughout the fuel cell lifetime, an ideal catalyst should be able to maintain its electrochemical surface area (ECSA).

Although earlier studies have corroborated nitrogen’s role in stabilizing the catalyst, high-pressure doping of nitrogen in a controlled environment on industrial-scale core−shell nanoparticles was not achieved.

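So they have now succeeded at something that was tried earlier without success. When I looked up that earlier work, it turned out to come from the same research group, which was reassuring. It is the following two papers.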

(25) Kuttiyiel, K. A.; Sasaki, K.; Choi, Y.; Su, D.; Liu, P.; Adzic, R. R. Nitride Stabilized PtNi Core−Shell Nanocatalyst for high Oxygen Reduction Activity. Nano Lett. 2012, 12 (12), 6266−6271.

(26) Kuttiyiel, K. A.; Choi, Y.; Hwang, S.-M.; Park, G.-G.; Yang, T.-H.; Su, D.; Sasaki, K.; Liu, P.; Adzic, R. R. Enhancement of the oxygen reduction on nitride stabilized Pt-M (M = Fe, Co, and Ni) core−shell nanoparticle electrocatalysts. Nano Energy 2015, 13, 442−449.

Thus, in this study, to obtain a highly stable and active ORR catalyst, a high-pressure nitriding reactor that can infuse a controlled number of nitrogen (N) atoms into the alloy nanoparticles was developed.

Varying the ratio of N atoms in the PtCo/C core−shell nanoparticles can significantly affect the morphology of the nanoparticles and simultaneously increase their stability without impacting the activity.

Herein, we report the preparation of N-stabilized PtCo core−shell nanoparticles with ultrastable configurations; the result is a highly durable ORR catalyst that can withstand up to 1 000 000 cycles in accelerated stress tests (ASTs), enabling rapid commercialization of fuel cell vehicles.

To the best of our knowledge, thus far, no catalysts have been reported that can last 1 million cycles.

The best configuration (Pt40Co36N24/C) retained 93% of its ECSA, while its initial half-wave potential decreased by only 6 mV after 30 000 cycles.

This confirms that the proposed configuration is a suitable alternative to the commercial Pt/C catalyst, whose ECSA deteriorated by 40% under similar conditions.

CONCLUSION

We exhibited that nanostructured core−shell materials with high contents of N in their cores can be engineered to sustain harsh and oxidative electrochemical environments during fuel cell operation.

X-ray experiments and PDF analyses revealed that a high N content could protect the Co core against dissolution.

The sustainment of 1 million cycles after harsh and corrosive ASTs without significant dissolution facilitates the potential industrial scale application of the catalysts.

This strategy presents a promising approach to develop cheap and ultradurable core−shell catalysts using other 3d transition metal cores.

Saturday, August 14

High Pressure Nitrogen-Infused Ultrastable Fuel Cell Catalyst for Oxygen Reduction Reaction, Eunjik Lee et al., ACS Catal., 11, 5525−5531 (2021)

RESULTS AND DISCUSSION

Carbon-supported PtCo core−shell nanoparticles were prepared by reducing platinum acetylacetonate [Pt(acac)2] and cobalt acetylacetonate [Co(acac)2] via ultrasound-assisted polyol synthesis.

Transmission electron microscopy (TEM) analysis showed that the as-synthesized PtCo nanoparticles exhibited a core−shell structure with an average particle size of ∼2.3 nm (Figure S1).

Scanning TEM (STEM) and energy dispersive X-ray spectroscopy (EDS) confirmed the core−shell structure with 1−2 Pt monolayers on the Co-rich core (Figure 1B−D).

The PtCo core−shell nanoparticles were annealed in an argon/ammonia mixture (N2/NH3: 5/95) at 510 °C in three pressurized environments (1, 40, and 80 bar).

The nanoparticles maintained their core−shell structures and exhibited an increase in the particle size and a change in composition (Figure 1F−H).

As shown in Figure 1E, higher pressure increases the N content in the nanoparticles but ultimately decreases the particle size.

On the basis of the N content in the nanoparticles, the molar ratio changes drastically; the resultant nanoparticles are denoted as Pt52Co48/C, Pt53Co45N2/C, Pt44Co42N14/C, and Pt40Co36N24/C (Table 1).

For all samples, in-house X-ray diffraction (XRD) patterns exhibit the typical face-centered cubic (fcc) structure, with no phase segregation, corresponding to Pt and its alloys with transition metals (JCPDS, No. 87-0646) (Figure 1A).

The position of the (111) peak of PtCo/C shifts to a higher angle compared with that of Pt/C, indicating that Co atoms with relatively smaller atomic sizes are incorporated into the Pt lattice, causing compressive strain.

Interestingly, the nitriding pressure directly affects the full width at half-maximum (fwhm) and position of the (111) peak.

In particular, the fwhm increases and the (111) peak position gradually shifts to a lower angle with an increase in the nitriding pressure.

This suggests that the nitriding pressure changes the atomic structure of the catalyst particles while relaxing the lattice mismatch between Pt skin and cobalt nitride core (Table 1).
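
Note to self: the link between the (111) peak position and the lattice size follows from Bragg's law. The sketch below is only an illustration of that relation. The Cu K-alpha wavelength and the two peak positions are my own assumptions, not values from the paper.

```python
import math

# Assumption: a lab diffractometer with a Cu K-alpha1 source (not stated in the notes).
CU_K_ALPHA_ANGSTROM = 1.5406


def d_spacing(two_theta_deg, wavelength=CU_K_ALPHA_ANGSTROM):
    """First-order Bragg's law: d = lambda / (2 sin(theta))."""
    theta = math.radians(two_theta_deg / 2.0)
    return wavelength / (2.0 * math.sin(theta))


def fcc_lattice_parameter(two_theta_111_deg, wavelength=CU_K_ALPHA_ANGSTROM):
    """Cubic cell: a = d_hkl * sqrt(h^2 + k^2 + l^2), which is sqrt(3) for (111)."""
    return d_spacing(two_theta_111_deg, wavelength) * math.sqrt(3.0)


# Hypothetical (111) peak positions, chosen only to show the trend.
for label, two_theta in [("lower angle", 39.8), ("higher angle", 40.8)]:
    a = fcc_lattice_parameter(two_theta)
    print(f"{label}: 2theta = {two_theta:.1f} deg -> a = {a:.3f} angstrom")
```

A peak at a higher angle gives a smaller lattice parameter (compression), and a shift back to a lower angle gives a larger one (relaxation), which is the direction of the argument in the text.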

Furthermore, X-ray photoelectron spectroscopy (XPS) studies indicate that, compared with metallic Pt, the Pt 4f peak in all samples shifts to a lower binding energy (BE), likely owing to the charge transfer from Co to Pt (Figure S2).

Additionally, no peaks (∼399.8 eV) for imides/lactams/amides are observed, indicating that most N in the samples exists in the form of nitrides.

To gain further insights about how the as-synthesized PtCo core−shell nanoparticles maintain their structures while incorporating N atoms, we carried out ab initio molecular dynamics (AIMD) studies to simulate the formation of the CoN nanophase in the nanoparticle core.

Before the conduction of AIMD, the NH3 molecules were packed into a unit cell with cuboctahedral PtCo nanoparticles under pressures of 1, 10, and 45 bar by use of the COMPASSII force field.

We considered the entropic effect to identify the continuous reaction process incorporated at a finite temperature of 783 K.

In the case of a single PtCo nanoparticle, it is found that N atoms from the NH3 molecules cannot penetrate the Co core even at a high pressure of NH3, as shown in Figure S3 and Movie S1.

Therefore, we tested the case of formation of PtCoN core−shell nanoparticles through a particle growth process involving the agglomeration of the preformed PtCo fragments into nitride cores that are consequently covered by a Pt shell.

The results shown in Figure 2A indicate that this is the likely mechanism of the particle size increasing from ∼2.3 nm for pure PtCo nanoparticles to ∼4.2 nm for Pt53Co45N2/C (Table 1).

Interestingly, AIMD studies are appreciably consistent with the observation that two Pt12Co1 nanoparticles at 10 bar of NH3 (e.g., 28.7 bar at 783 K) can spontaneously merge without any considerable activation barrier.
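
Note to self: the parenthetical "28.7 bar at 783 K" appears consistent with simple ideal-gas scaling at fixed volume. The sketch below checks that reading. The reference temperature of 273.15 K is my assumption; the paper excerpt does not state it.

```python
def isochoric_pressure(p_ref_bar, t_ref_k, t_k):
    """Ideal gas at fixed volume and amount: p / T is constant."""
    return p_ref_bar * t_k / t_ref_k


T_REF_K = 273.15    # assumed reference temperature for the packing pressure
T_ANNEAL_K = 783.0  # simulation temperature quoted in the text

for p_ref in (1.0, 10.0, 45.0):  # packing pressures quoted in the text
    p_hot = isochoric_pressure(p_ref, T_REF_K, T_ANNEAL_K)
    print(f"{p_ref:5.1f} bar at {T_REF_K} K -> {p_hot:6.1f} bar at {T_ANNEAL_K} K")
```

Under this assumption the 10 bar case comes out at about 28.7 bar, matching the quoted value.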

The simulations indicate the formation of irregular particles with a compressed Pt−Pt distance depending on the location of nearby N atoms, as revealed by the atomic pair distribution function (PDF) analysis and the reverse Monte Carlo modeling (discussed below), thereby increasing the number of N atoms that exist near the Pt sublayer.

In situ Co K edge X-ray absorption near-edge structure (XANES) spectra of Pt52Co48/C, Pt53Co45N2/C, Pt44Co42N14/C, and Pt40Co36N24/C nanoparticles (Figure 2B) were obtained in 0.1 M HClO4 at a potential of 0.42 V.

As the N concentration increases, the peak intensity at 7724 eV starts decreasing; the highest peak at 7727 eV is observed at a N concentration of >14 at%.

This change can be ascribed to a change in the electronic structures of Co due to N doping.

As shown in Figure S7, the XANES spectra of CoO (Co2+) and Co3O4 (Co2.67+) exhibit the highest peaks at 7725 and 7729 eV, respectively; meanwhile, the highest peak for Pt40Co36N24/C lies between them.

Thus, the N doping of PtCo catalysts alters the electronic state of Co, resulting in an increase in the oxidation state.

The increase in the oxidation state with an increase in the N content is also supported by the data shown in the inset of Figure 2B; half-step energy values (at 0.5 of the normalized absorption in the XANES spectra) increase with an increase in the N concentration.
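
Note to self: the "half-step energy" is the energy at which the normalized absorption reaches 0.5 on the rising edge. A minimal sketch of how it could be read off a spectrum by linear interpolation follows. The function name and the spectrum are made up for illustration and are not data from the paper.

```python
def half_step_energy(energies_ev, mu_norm, level=0.5):
    """Return the first energy where the normalized absorption rises through `level`.

    Uses linear interpolation between the two samples that bracket the crossing.
    """
    points = list(zip(energies_ev, mu_norm))
    for (e0, m0), (e1, m1) in zip(points, points[1:]):
        if m0 < level <= m1:
            return e0 + (level - m0) * (e1 - e0) / (m1 - m0)
    raise ValueError("spectrum never rises through the requested level")


# Synthetic rising edge, for illustration only.
energies = [7715.0, 7717.0, 7719.0, 7721.0, 7723.0]
mu = [0.05, 0.20, 0.40, 0.70, 1.00]
print(f"half-step energy: {half_step_energy(energies, mu):.2f} eV")
```

A shift of this energy to higher values between samples is what the text reads as an increase in the Co oxidation state.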

Figure 2C shows the in situ Pt L3 edge XANES spectra of the PtCo/C and N−PtCo/C catalysts measured in 0.1 M HClO4 at a potential of 0.42 V.

The intensities of the white lines (first peaks in XANES data) change with the variation in the N content in the N−PtCo/C catalysts.

As shown in the inset of Figure 2C, the intensity increases with increase in N concentration; it is higher than that of a Pt foil but lower than that of the PtCo/C catalyst.

The change in white line intensity is related to the d-band structure in Pt. It is well-known that higher intensities correspond to an increase in d-band vacancy; that in turn lowers the adsorption of the intermediate molecules (such as OOH and OH) on the Pt surface.

Thus, N doping can weaken the interaction of the Pt surface with oxygen, compared with that of bulk Pt.

However, the effect is not as strong as that for the PtCo/C catalyst as the white line intensity for the N−PtCo/C catalysts is lower than that of PtCo/C and varies with the N content.

The XANES data suggest that N doping in N−PtCo/C alters the electronic states of Co and Pt, resulting in moderate adsorption strength of oxygen on the Pt surface.

To comprehensively understand the particle structure, high-energy synchrotron XRD experiments coupled with atomic PDF analysis were carried out.

Experimental PDFs (Figure S8) were fit with 3D models for the nanoparticles using classical molecular dynamics (MD) simulations and were further refined against the experimental PDF data by employing reverse Monte Carlo modeling.
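
Note to self: a PDF is built from the distribution of interatomic distances in a structure model. The sketch below is not the MD or reverse Monte Carlo procedure of the paper. It only counts pair distances in an ideal fcc block to show where the first (nearest-neighbor) peak comes from. The lattice parameter of 3.910 angstrom is my assumption, chosen so that the nearest-neighbor distance is close to the bulk Pt value of 2.765 angstrom quoted in these notes.

```python
import itertools
import math
from collections import Counter


def fcc_cluster(a, n_cells):
    """Atom positions of an ideal fcc block of n_cells^3 conventional cells."""
    basis = [(0.0, 0.0, 0.0), (0.5, 0.5, 0.0), (0.5, 0.0, 0.5), (0.0, 0.5, 0.5)]
    atoms = []
    for i, j, k in itertools.product(range(n_cells), repeat=3):
        for bx, by, bz in basis:
            atoms.append(((i + bx) * a, (j + by) * a, (k + bz) * a))
    return atoms


def pair_distance_histogram(atoms, bin_width=0.05, r_max=6.0):
    """Count atom pairs per distance bin (unnormalized; the raw input to a PDF)."""
    counts = Counter()
    for p, q in itertools.combinations(atoms, 2):
        r = math.dist(p, q)
        if r < r_max:
            counts[int(r / bin_width)] += 1
    return counts


A_PT = 3.910  # assumed lattice parameter in angstrom
hist = pair_distance_histogram(fcc_cluster(A_PT, 3))
first_bin = min(hist)  # the shortest distance present is the nearest-neighbor shell
print(f"first peak between {first_bin * 0.05:.2f} and {(first_bin + 1) * 0.05:.2f} angstrom")
```

In a real refinement the model positions are adjusted until the computed distribution matches the measured one; the shift of this first peak is what gives the surface Pt−Pt distances discussed next.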

Cross sections of the models emphasizing the core−shell characteristics of the particles are shown in Figure 3.

The models exhibit a distorted fcc-type structure and reproduce the experimental data in exceedingly good detail (Figure S8).

The bonding distances between the surface Pt atoms and surface Pt coordination numbers extracted from the models are also shown in Figure 3.

As observed, PtCo core−shell particles exhibit large structural distortions (∼1.8%).

The surface Pt−Pt distance in Pt53Co45N2 is 2.739 Å, which is approximately 1.5% shorter than the surface Pt−Pt distances in bulk Pt (2.765 Å).

Furthermore, the surface Pt−Pt distance in PtCo is 2.731 Å, indicating 0.3% more strain compared with the strain observed in the Pt53Co45N2 particles.

This indicates that N relaxes the compressive stress in PtCo core−shell particles.
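
Note to self: the strain values can be checked directly from the quoted distances. The sketch below uses only the three Pt−Pt distances given above; the function name is mine.

```python
BULK_PT_PT = 2.765  # surface Pt-Pt distance in bulk Pt, in angstrom (from the text)


def contraction_percent(distance, reference=BULK_PT_PT):
    """Relative shortening of a bond distance with respect to the reference, in percent."""
    return 100.0 * (reference - distance) / reference


surface_distances = {"PtCo": 2.731, "Pt53Co45N2": 2.739}  # angstrom, from the text
for name, d in surface_distances.items():
    print(f"{name}: {contraction_percent(d):.2f}% shorter than bulk Pt")
```

Computed this way, the two samples differ by about 0.3 percentage points, in line with the text. The absolute values come out near 1.2% and 0.9%, so the "approximately 1.5%" quoted above may rest on a different reference distance or rounding; I have not been able to confirm which.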

Moreover, the average surface Pt coordination number for the particles with CoN cores increases and becomes more evenly distributed than in the case of pure Pt particles; that is, the surfaces of N-treated particles appear less rough (fewer undercoordinated sharp edges and corners), which can affect the binding strength of oxygen molecules to the particle surface and accelerate the ORR kinetics.

As expected, the N-treated particles show an increased number of N atoms located near the Pt shell, which explains the increased stability of the nanoparticles compared with those of pure Pt and PtCo particles.

The electrochemical performances of all the catalysts were compared using cyclic voltammetry (CV) curves (Figure S4).

The incorporation of Co into the Pt nanoparticles increases the ECSAs of the catalysts, while that of N into the PtCo nanoparticles decreases their ECSAs (Figure 4A).

A slightly different trend was observed with respect to the specific and mass activities of the catalyst (Figure 4B).

The PtCo/C catalyst with low nitrogen content shows the highest activity among the catalysts; however, an increase in N content does not drastically change its catalytic behavior.

Our study was mainly focused on achieving structural stability of the catalyst.

AST cycles at 0.6−0.95 V and 3 s hold were employed for each catalyst.
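
Note to self: to get a feeling for how long these tests run, the cycle counts can be converted to wall-clock time. The sketch assumes a square wave with one 3 s hold at each potential limit and neglects ramp times; that cycle shape is my assumption, since the excerpt only says "3 s hold".

```python
HOLD_SECONDS = 3.0
HOLDS_PER_CYCLE = 2  # assumption: one hold at 0.6 V and one at 0.95 V, ramps neglected


def ast_duration_hours(cycles):
    """Total test time in hours for a given number of AST cycles."""
    return cycles * HOLDS_PER_CYCLE * HOLD_SECONDS / 3600.0


for n in (30_000, 180_000, 1_000_000):  # cycle counts mentioned in these notes
    hours = ast_duration_hours(n)
    print(f"{n:>9,} cycles -> {hours:8.1f} h ({hours / 24:.1f} days)")
```

Under these assumptions, 30 000 cycles take about two days, while 1 000 000 cycles take more than two months of continuous cycling.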

All N-infused PtCo/C catalysts showed higher stability and activity compared with commercially available Pt/C and PtCo/C catalysts (Figure S5).

The catalyst with the highest N amount (Pt40Co36N24/C) retained 93% of its ECSA, with a decrease of only 6 mV in its initial half-wave potential after 30 000 cycles.

To further investigate the structural integrity of all the catalysts, we cycled them until the ORR activity decreased to half its initial value.

As observed in Figure 4C, most of the N-infused catalysts retained their structures up to 230 000 cycles; however, the catalyst with the highest amount of N (Pt40Co36N24/C) retained its structural integrity until 1 000 000 cycles and lost just 44 mV from its initial half-wave potential (Figure S6).

Fuel cell (25 cm2) performance tests with 0.1 mg cm−2 Pt content showed promising results (Figure 4D,E).

The Pt40Co36N24/C catalyst achieves the U.S. Department of Energy durability target of a 30 mV voltage drop at 0.8 A cm−2 after 30 000 ASTs (Figure 4H).

Moreover, considering the particle size growth after 30 000 ASTs, the PtCo nanoparticles grew by 41% from their initial average size (Figure 4F), whereas the N-infused PtCo nanoparticles grew by 21%, confirming that N plays a key role in impeding nanoparticle coarsening (Figure 4G).

As previously reported, DFT-based studies clearly support the higher ORR activities of nitride-stabilized Pt−metal electrocatalysts over Pt/C catalysts.

Their volcano-like trends show that the interactions of Pt/C and PtCo/C with oxygen are significantly stronger and weaker, respectively, compared with those of PtCoN/C.

The outstanding stability of high-pressure N-infused PtCoN/C catalysts can be easily explained on the basis of our recent DFT findings.